Build a Truly RESTful API using NodeJS & ExpressJS-The Ultimate Tutorial

— REST API, Tutorial — 130 min read

Introduction

The REST architecture for APIs was introduced by Roy Fielding as a part of his doctoral dissertation and has since revolutionized the web and the way that web applications communicate with each other. If you have no prior knowledge about REST, then the REST architecture basics blog post is a good starting point where we discuss the foundational concepts of the REST architecture and what APIs, Web APIs and REST APIs are.

A truly RESTful API is one that adheres to the 6 REST architectural constraints.

This tutorial aims to equip you with all the foundational concepts, guidelines, standards, conventions, tips and tricks that will enable you to design a truly RESTful API.

We'll learn all of this with the help of a step-by-step tutorial which will walk you through building a REST API for a very minimal note-taking app called Thunder Notes⚡. We'll also be looking at examples of real-life APIs designed by Github, Twitter, Slack, etc. to get an idea of how the big names out there design their APIs.

This may sound a little cliched but this is the tutorial I wish someone had written and I had read, before I implemented my very first REST API.

Here is the list of contents we'll be covering as a part of this tutorial.

- HTTP Request Methods or HTTP Verbs

- HTTP Response Status Codes

- Identification of Resources

- Setting up the Project

- Starting the API server

- Exploring the starter files

- GET

- POST

- PUT

- PATCH

- DELETE

- Hypermedia As The Engine Of Application State(HATEOAS)

- Authentication and Authorization

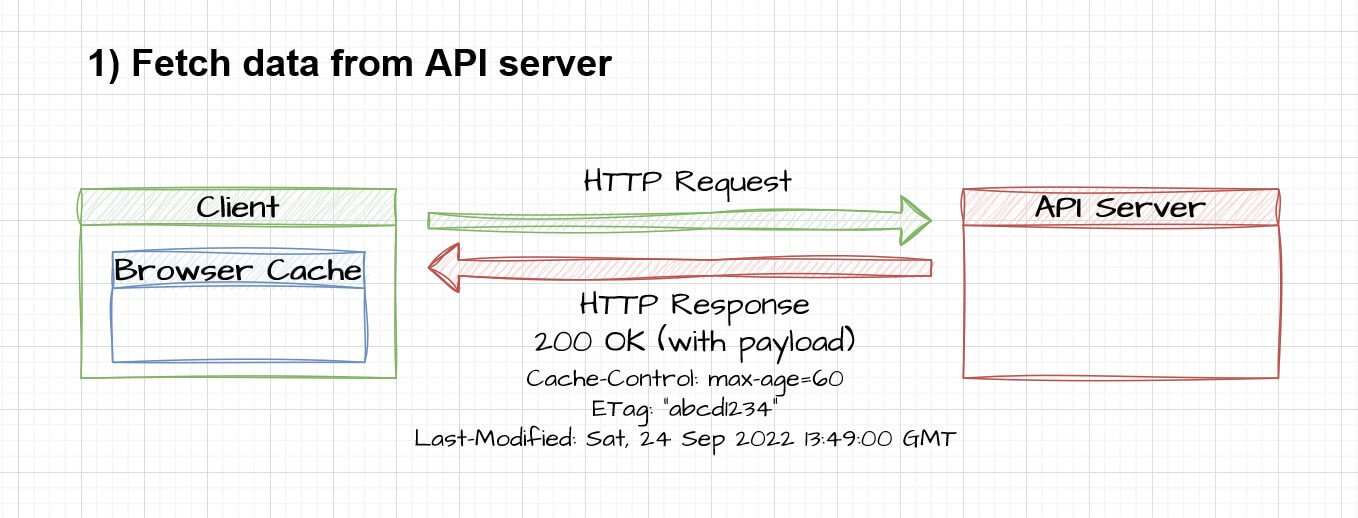

- Caching

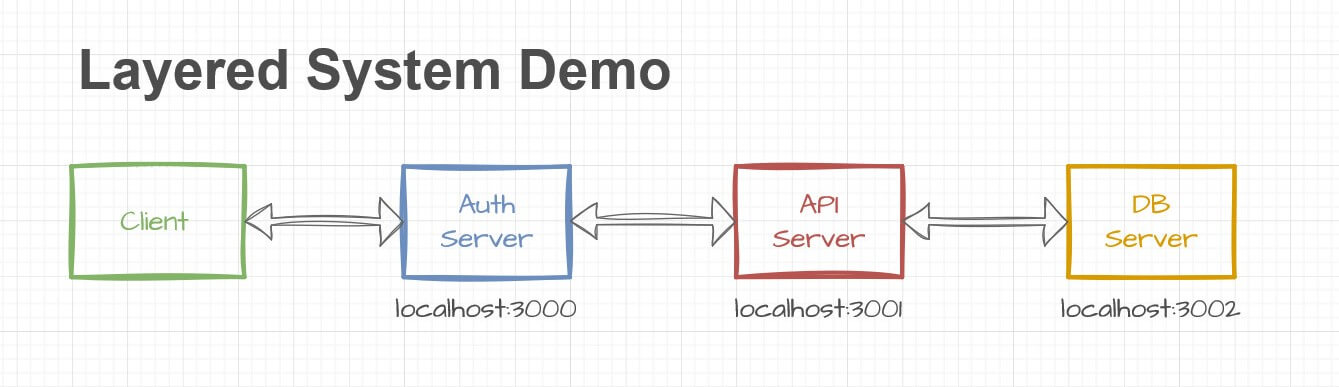

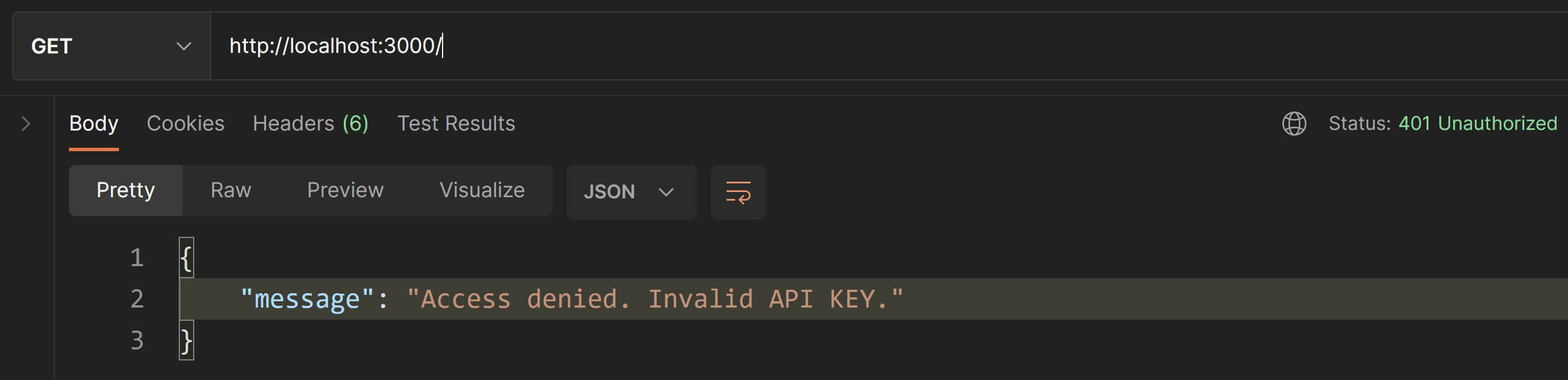

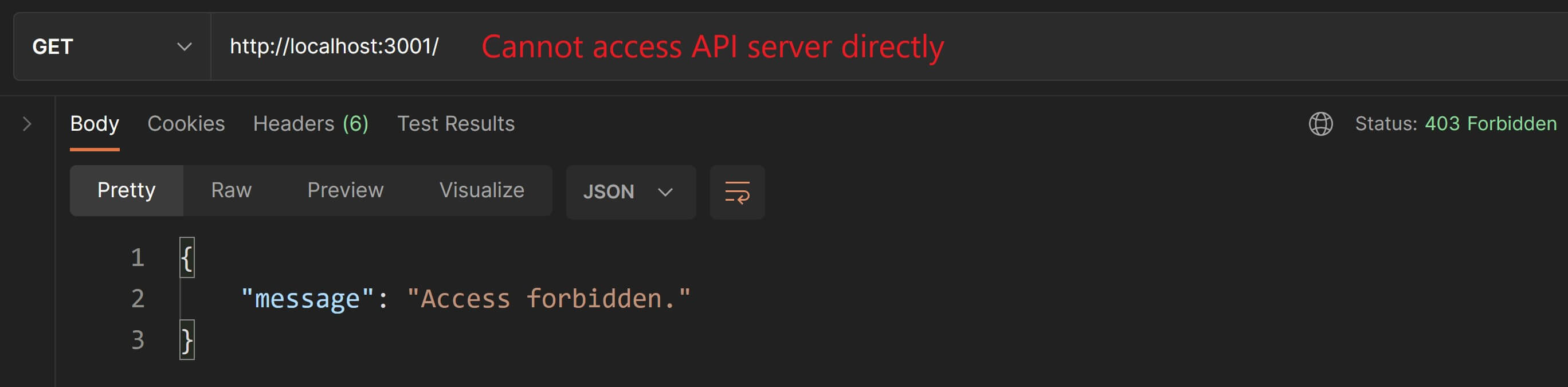

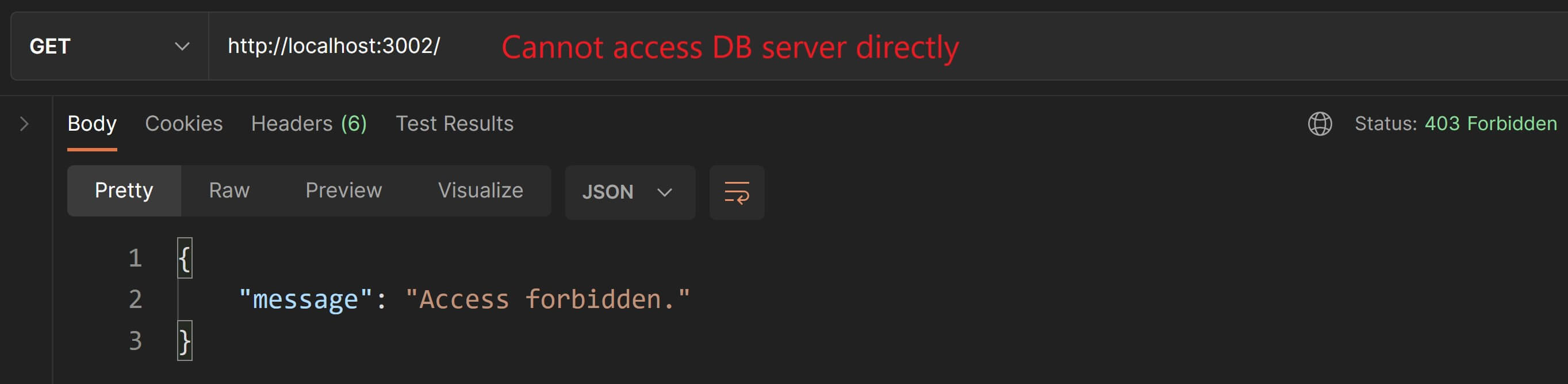

- Layered System

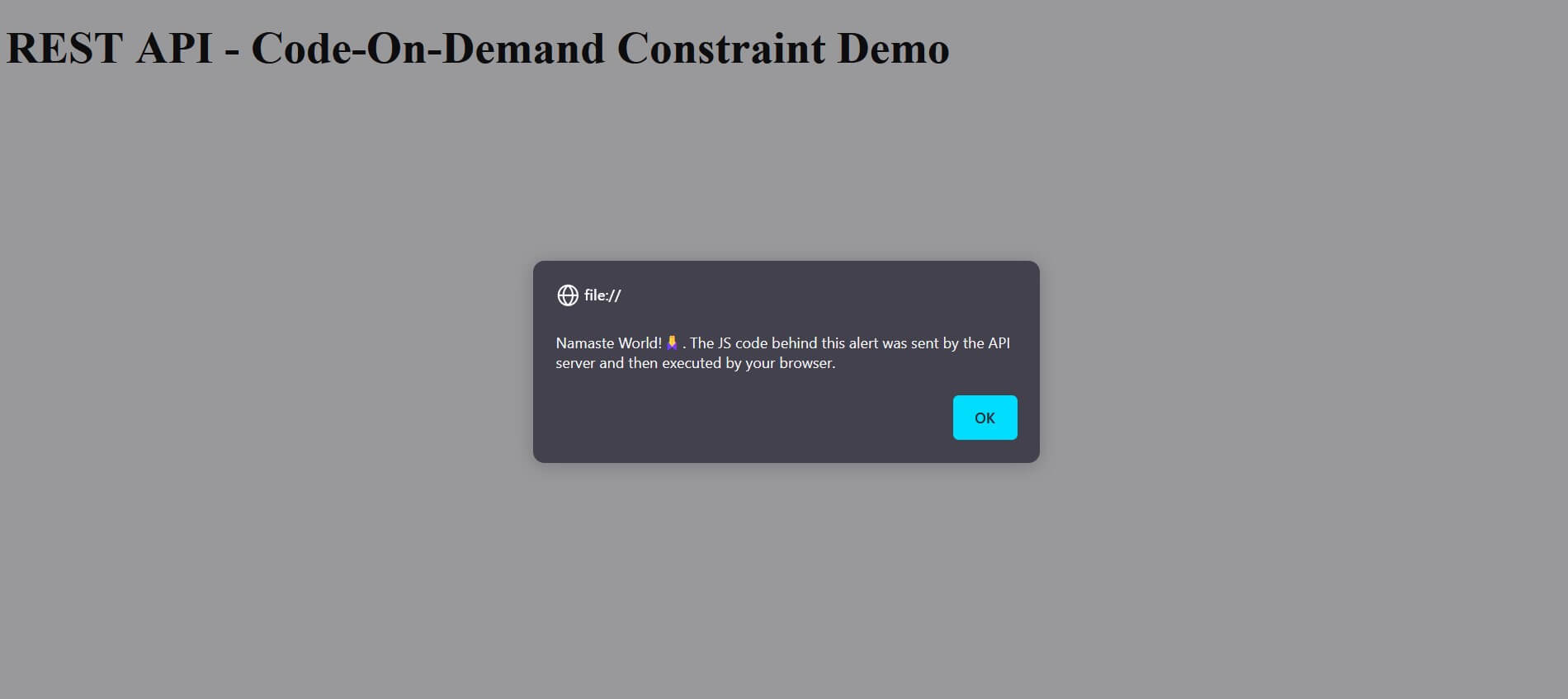

- Code-On-Demand

- Versioning

- Pagination

- Searching and Filtering

- Sorting

This is a comprehensive list and the article is probably big enough to qualify as a course which is intentional because I want this tutorial to serve as a one-stop solution for everything you need to know in order to build REST APIs that make your heart swell with pride💖.

Let's have a look at some of the concepts and terminologies that you need to know before getting started with the actual design.

HTTP Request Methods or HTTP Verbs

The HTTP specification defines several HTTP request methods that indicate a certain action to be performed on a requested resource. Since they represent an action, they are also referred to as HTTP verbs.

Some of the main HTTP verbs we'll cover in this tutorial are:

- GET

- POST

- PUT

- PATCH

- DELETE

Safety and Idempotency

Safety and Idempotency are properties of HTTP methods.

A safe HTTP method is one which does not alter the server state meaning we can only perform a read-only operation with it.

An idempotent HTTP method is one which has the same effect on the server state even if it is used for making multiple identical requests.

Since safe methods do not have any effect on the server state, all safe methods are inherently also idempotent.

Let's see how these properties are associated with our selected HTTP methods. We'll look more into the rationale behind some of these associations as we progress with the tutorial so don't worry if you don't completely understand what this means as of now.

| HTTP Method | Safe? | Idempotent? |

|---|---|---|

| GET | Yes | Yes |

| POST | No | No |

| PUT | No | Yes |

| PATCH | No | No |

| DELETE | No | Yes |

You need to keep these associations in mind while using HTTP methods for our API endpoints because clients or API consumers will expect their HTTP requests to behave as per these properties.

In real-world applications, API services may need to log messages or perform some statistical updates even for safe methods. This may be debatable but is generally allowed since this is not an unintended side-effect but an intentional feature implemented by the API developers on the server and does not concern the client.

HTTP Response Status Codes

While HTTP verbs enable the client to indicate to the server what operation needs to be performed on the resource, HTTP status codes enable the server to indicate the status of the request to the client.

These status codes are categorized into 5 classes:

100 - 199: Informational responses

200 - 299: Successful responses

300 - 399: Redirection messages

400 - 499: Client Error responses

500 - 599: Server Error responses

Below is a list of the some of the HTTP status codes which we'll be using in this tutorial.

200 OK: Request has succeeded.

201 Created: Resource has been created. The response body should contain the new resource and the URL of the resource should be provided in the Location header.

204 No Content: Indicates that the response body has no content. Typically sent as a response status code for PUT and DELETE HTTP requests.

301 Moved Permanently: Indicates that the resource is now identified by a new URI. The response should contain the new URI as a best practice.

400 Bad Request: Indicates that there is something wrong with the request in terms of its format or input parameters and cannot be processed by the server. The client should modify the request before retrying.

401 Unauthorized: Indicates that the resource being requested is protected and requires authorization for being accessible. Repeating the request with proper authentication credentials may help in gaining access.

403 Forbidden: Indicates that the API understands the request but prohibits access to the resource. Authencation will not help gain access so request should not be repeated.

404 Not Found: Indicates that the requested URI cannot be mapped to an existing resource. The resource may exist at a later point in time so retrying the request is allowed.

405 Method Not Allowed: Indicates that the server recognizes the HTTP method but the target resource doesn't support it. The server must set the Allow header in the response.

500 Internal Server Error: This is a generic status code which indicates that an unexpected condition has occurred on the server and has halted the processing of the request. This code is used when no other 5XX code is found suitable. The client can retry the request.

For your reference, here is the full list of all the status codes.

Identification of Resources

You should aim to organize the design of your API around resources. So the first step in designing a REST API is identifying the resource entities and associating them with unique URIs.

A resource can either be a:

- Collection resource like projects, todo-lists or todos.

- Singleton resource like an individual project, todo-list or todo.

Let's look at how we can construct URIs for these resources.

URIs for collection resources can simply be:

/projects/todo-lists/todosURIs for singleton resources can be formed as:

/projects/{id}/todo-lists/{id}/todos/{id}Best Practice: Always try to use plural nouns for naming resources i.e

/projects,/todo-lists/{id}or/todos/{id}. Refrain from using single resource names like/project,/todo-list/{id}or/todo/{id}.

So how'd we design the URI to retrieve all todo-lists in a project?

/projects/{id}/todo-listsWhat about retrieving info about a todo in a particular todo-list?

/projects/{pId}/todo-lists/{tlId}/todos/{tId}For the Thunder Notes API, we need to identify just one resource for now i.e. notes. We'll use the following URIs for this resource:

/notes/notes/{id}We can match the URIs with the HTTP verbs and make a list of the API endpoints we're going to implement.

GET /notesPOST /notes

GET /notes/{id}PUT /notes/{id}PATCH /notes/{id}DELETE /notes/{id}Always use nouns to name resources and HTTP verbs to indicate the action to be performed on those resources. For example, avoid using URIs like

/notes/{id}/deletewhere the action to be performed is included in the URI and instead design this asDELETE /notes/{id}. It is best to rely on HTTP verbs to indicate the action to be performed on the resource.

By identifying our resources, we have successfully tackled a sub-constraint of the core REST constraint: Uniform Interface.

Setting up the Project

For the tutorial, we'll be using NodeJS and ExpressJS to implement our API server. Don't worry in case you're not familiar with these technologies and their syntax because our primary focus will be on learning how to design REST APIs. This approach will enable you to use the concepts taught in this article and apply the same using any language, framework or library of your choice.

This article assumes some basic familiarity with Git, Github and terminal commands. We'll also be using Postman for testing our API services. My preference is the Postman Desktop app but you can use the browser extensions also if you want.

Go to the tutorial Github repo and either clone or download it.

You'll see a few folders inside the repo folder but the one we're interested in right now is the starter-files folder.

The starter-files folder contains boilerplate code and serves as a basic skeleton upon which we can build our app and finally reach the version in the finished-files folder. The finished-files folder serves as a reference and contains the completed code for the entire tutorial.

Rename the starter-files folder to thunder-notes. We'll be working off of this folder for the majority of this tutorial.

Inside this newly renamed thunder-notes folder, you'll find two folders, one named client that will hold the API client/consumer codebase and the other one named server that will hold the API server codebase.

The client folder only has an index.html file for reference. In real-world applications, this folder will contain the application's front-end codebase and will be concerned with the user experience side of things along with communicating with the API server to perform CRUD operations on the data. But in this tutorial we'll use Postman to act like a mock client and make API requests on its behalf.

Our tutorial, right out of the box, enables the implementation of the Client-Server core REST constraint since it separates the application's presentation layer into the client folder and the business logic and database interactions into the API layer in the server folder.

Starting the API server

First off, rename the file .env.sample to .env. This file contains private and sensitive data like API keys, database passwords and other environment specific settings and is typically not committed into a git repo. Instead, a .env.sample file which contains dummy values for these settings is committed to serve as a template that can be used to generate the .env file later. For this tutorial, you can leave the values as is in the renamed .env file.

Now open command prompt and cd into the folder thunder-notes/server . Run npm install. This command will install the dependencies for our api server.

Once the installation is over, run npm start.

You'll get an output on the console that says:

👂 Listening on port 3000Awesome! This means that our API service is ready to serve requests at localhost:3000.

If you visit localhost:3000 in your browser, you'll be sending a GET request to the root API endpoint. You'll notice that the browser is unable to load anything. This is because there is no route handler definition for our root API endpoint.

Let's remedy that quickly. Paste this code into your handlers/rootHandler.js file.

exports.handleRoot = ( req, res ) => { res.json({ "message": "Welcome to the Thunder Notes API." });}Let's quickly go over what this route handler does. ExpressJS encapsulates information about the request like headers, payload, etc. into a request object and exposes various options for sending a response in a response object. Both these objects are passed automatically to the above route handler as input arguments and we reference them as req and res. The response object exposes the method json() which returns a JSON response to the client. The default status code returned is 200 OK.

Did you also notice that as soon as you saved your changes, the server restarted automatically. This is a pre-built configuration in the tutorial codebase so you don't have to manually restart the server every time you make a change.

If you visit localhost:3000 in your browser this time, you'll see the JSON response.

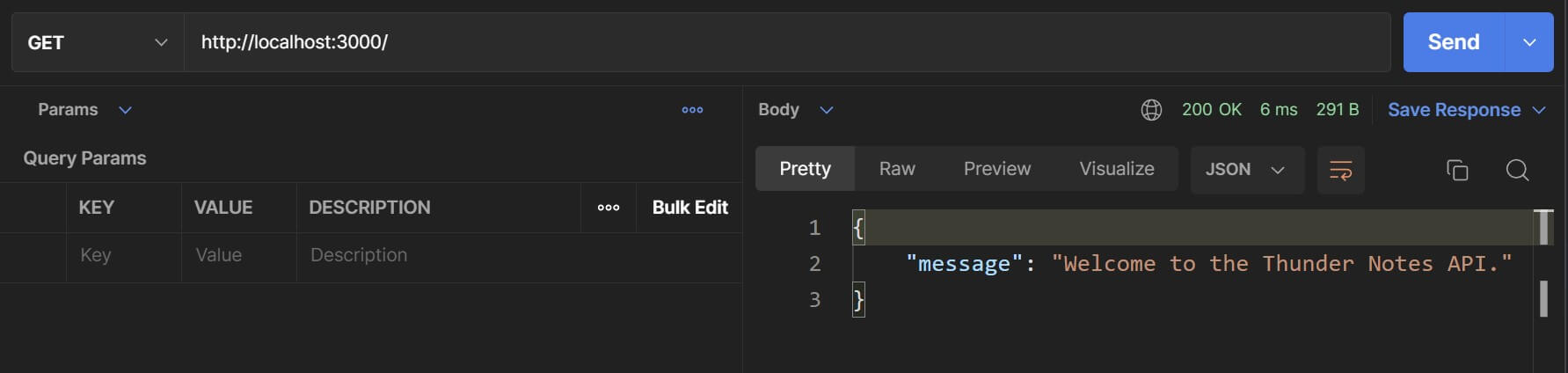

Let's also test this endpoint in the Postman app. Enter the URL to send the request to, which in this case is http://localhost:3000/ and hit the "Send" button and you should see the same response.

Please Note: You may be using the Postman browser extension so there may be a few differences in the UI but the basic usage will remain the same.

Congrats!🎉 Our API server is up and running and we're all set to get started with building the Thunder Notes API.

Exploring the starter files

Let's quickly walkthrough the files and the boilerplate code. I have added verbose comments about what these files and functions within them are used for so feel free to explore the code in them along with reading this article.

app.js: This file contains code for initializing our API server. This is NodeJS specific stuff so no need to worry if you don't understand this immediately but the comments should make it clear what each block of code does.

data/data.js: To keep things simple, we are not using an actual database for this tutorial. Instead we are simply using JavaScript objects. One for storing all the notes and another for storing all the users. Both these data sets are then exported as properties on a single object called data which is then used in all places where we need to access this data. As an analogy, if we'd have been using a relational database then these would have been the tables.

routes.js: This file contains the URI routes that our app will respond to. We have discussed these in the previous section and you'll notice that we are calling methods on the router object called get(), post(), put(), patch() and delete() which are exposed by ExpressJS. These methods correspond to the HTTP verbs we have discussed.

We specify the URI slug as the first argument to these methods and then pass in a route handler function as the second argument. This function is invoked whenever a request for the corresponding "URI slug-HTTP verb" combination comes in.

We define these functions within handlers/noteHandlers.js and handlers/rootHandler.js. The purpose of these files is to implement the routing logic for API endpoints. If you look at these two files right now you'll see that the definitions are missing. That is intentional and we are going to fill in those blanks as we progress with this tutorial.

controllers/notesController.js and controllers/usersController.js: The purpose of these files is to implement business-logic as well as communicate with the database which in this case is our data object exported by data/data.js.

controllers/notesController.js exposes methods that perform CRUD operations on data.notes. These operations are implemented while keeping immutability in mind.

controllers/usersController.js exposes methods that deal with fetching user information.

Most of our API endpoints will only interact with the notes resource. The concept of users will come into play only for learning about user authentication in REST APIs. This is why I have intentionally skipped operations like user registration, user profile udpates or user deletion because these operations will be similar to what we'll learn for the notes resource and I don't want to make this tutorial any longer than it already is or needs to be.

Also, the reason I have included these controller functions in the starter files is so that you don't need to worry about how the data is being stored which is an implementation detail and not directly related to REST API design.

package.json and package.lock.json contain metadata about our API server as a NodeJS project, NPM dependencies and scripts to initialize our server.

Great🙌! Now that we have a good idea about the code included in the starter files, let's proceed with implementing the routes we had discussed in the previous section.

GET

You might already be familiar with the GET HTTP verb but let's look into its usage in the context of REST APIs.

The

GETmethod requests a representation of the specified resource. Requests usingGETshould only retrieve data. - MDN Docs

In the previous section, we've already seen how to handle GET requests on the root endpoint using router.get().

Now we'll handle GET requests on the notes resource endpoints i.e. /notes and /notes/{id}.

Open the noteHandlers.js file and import the notesController.js file at the very top using this statement.

const notesCtrl = require( "../controllers/notesController" );After this, add the below route handler function definition to getNotes() inside noteHandlers.js.

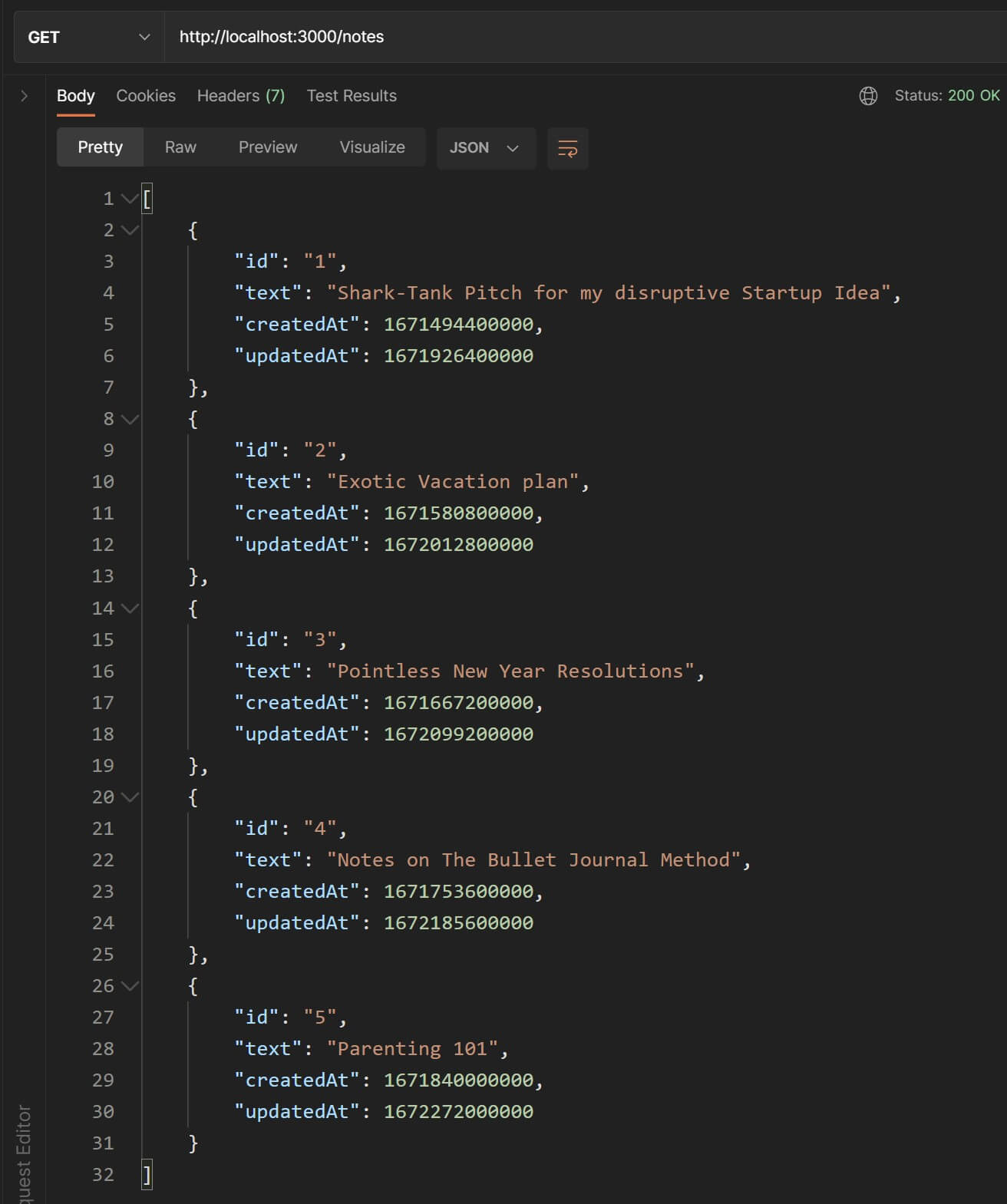

exports.getNotes = ( req, res ) => { // get all the notes in the database const notes = notesCtrl.getAllNotes(); // send a `200 OK` response with the note objects in the response payload res.json( notes );}So what we're doing here is using the controller function getAllNotes() to retrieve a collection of all the note objects and then returning a JSON representation of this payload in the response.

Let's check the response in Postman. Enter the url as http://localhost:3000/notes and send a GET request. You should see a response as shown below:

Awesome🤘! Our GET /notes endpoint is working.

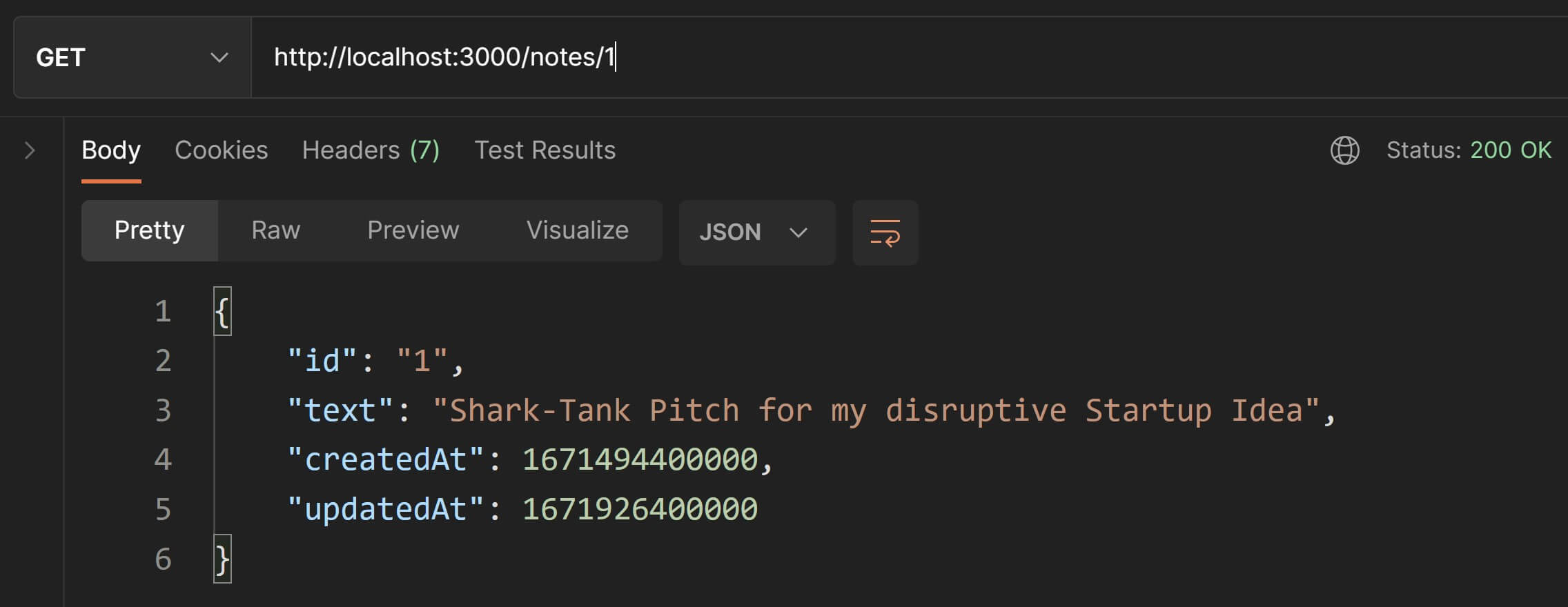

Now for handling GET requests on the /notes/{id} endpoint, use the below route handler function definition for getNote().

exports.getNote = ( req, res ) => { // {id} value from the URI is stored in `req.params.id`. const note = notesCtrl.getNoteById( req.params.id ); // send a `200 OK` status code with the note object in the response payload res.json( note );}ExpressJS takes the dynamic :id value from the URI and stores it in req.params with the same name i.e. req.params.id. We pass this in to a different controller function this time, getNoteById() which accepts a single ID and returns a single note object.

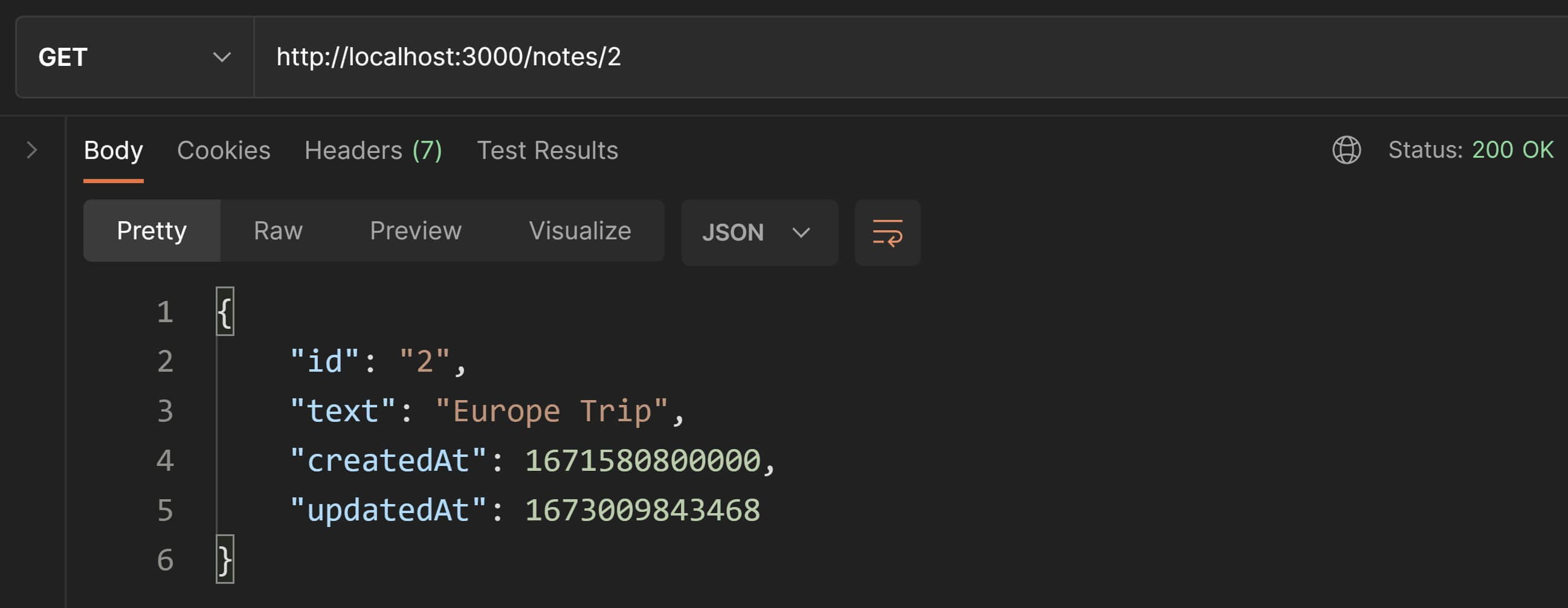

Let's test this out in Postman. Enter the URL as http://localhost:3000/notes/1 where 1 is the ID of the first note object in data.js.

Wonderful🌟! Our endpoint for fetching a single note is also working.

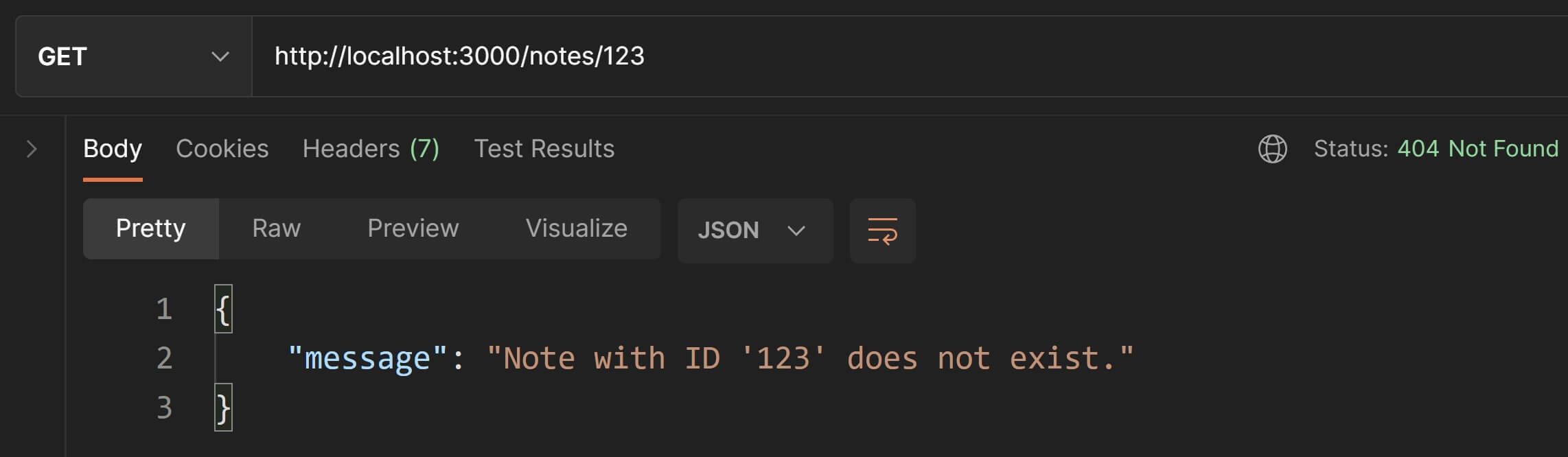

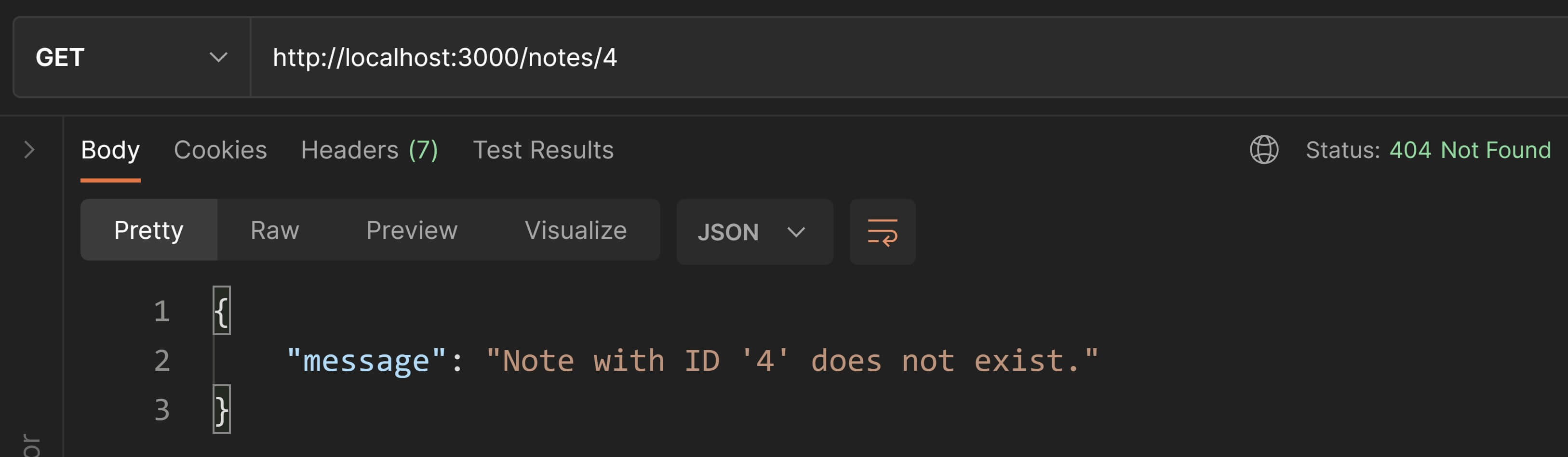

But what if the input :id value doesn't correspond to an existing note. In that case, we must return a 404 Not Found status code.

Copy-paste this new function definition for the getNote() handler to implement this validation.

exports.getNote = ( req, res ) => { // {id} value from the URI is stored in `req.params.id`. const note = notesCtrl.getNoteById( req.params.id );

// if the note does not exist then throw `404 Not Found`. const noteExists = typeof note !== "undefined"; if( !noteExists ) { res.status( 404 ).json({ "message": `Note with ID '${req.params.id}' does not exist.` }); return; }

// send a `200 OK` status code with the note object in the response payload res.json( note );}Notice that while sending the 404 Not Found response, we explicitly call status() because we don't want to send the default 200 OK status code.

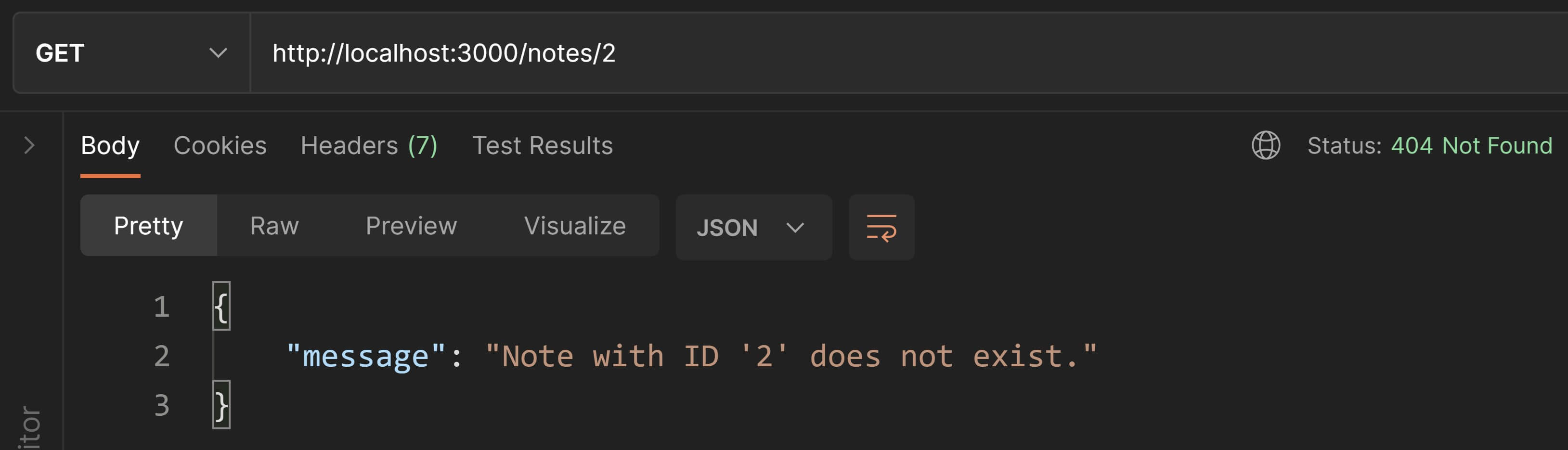

Back in Postman, let's test this validation by using a non-existent ID like 123.

Done✅! We have successfully implemented the GET endpoints for the Thunder Notes API.

Also notice that these GET endpoints perform a read-only operation and do not change the server state. This is why GET requests are considered safe which implies that they are also idempotent.

POST

Generally, POST is used to send a payload of data along with the HTTP request. So technically, you can use POST for creating or updating resources.

But as a convention in REST APIs, POST is used only for creating a new resource.

This also implies that POST is typically used on collection resources and not on singleton resources because URIs of singleton resources require an {id} like /notes/{id} but the client does not have the ID of a resource before it has been created on the server.

This means that for this tutorial, we'd only need to implement one POST endpoint for our /notes collection resource as:

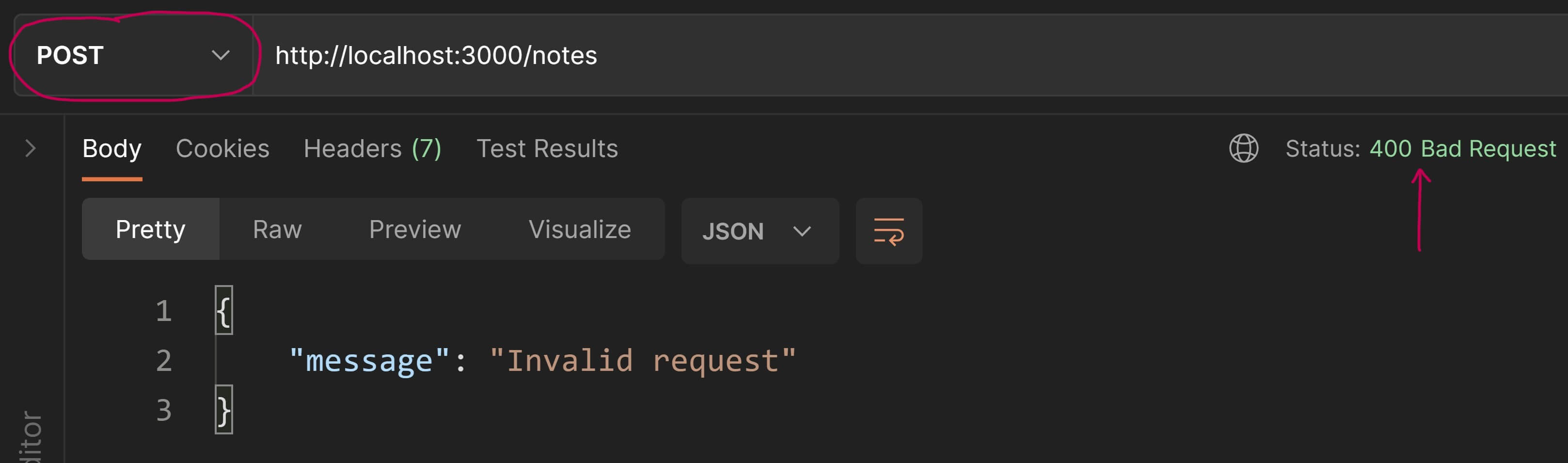

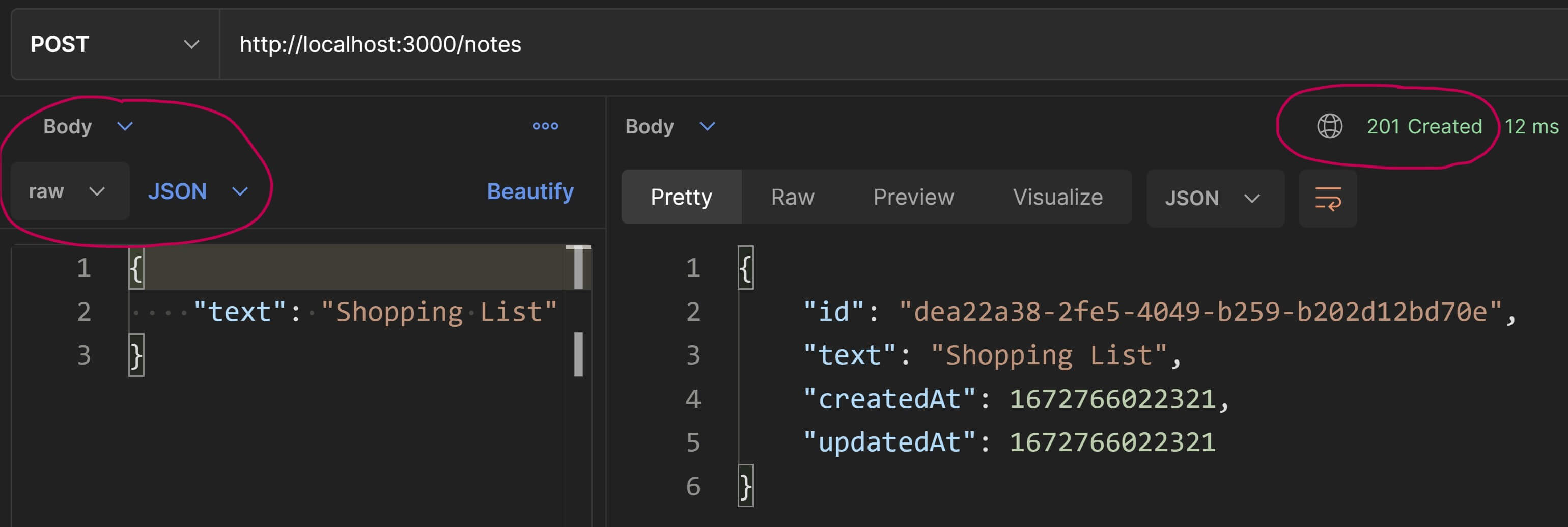

POST /notesLet's define the handler function for this route in noteHandlers.js.

exports.createNote = ( req, res ) => { // ExpressJS extracts the note's `text` from the request body and stores // it in `req.body`. If no `text` is provided, return `400 Bad Request`. if( !( "text" in req.body ) ) { return res.status( 400 ).json({ "message": "Invalid request" }); } const { text } = req.body; // create the new note // The first argument in the controller function `createNote()` is `userId`. // We'll deal with user authentication later so for now, // just use a hardcoded value of "1" for userId. // "1" happens to be the ID of a pre-defined user in "data/data.js". const newNote = notesCtrl.createNote( "1", text );

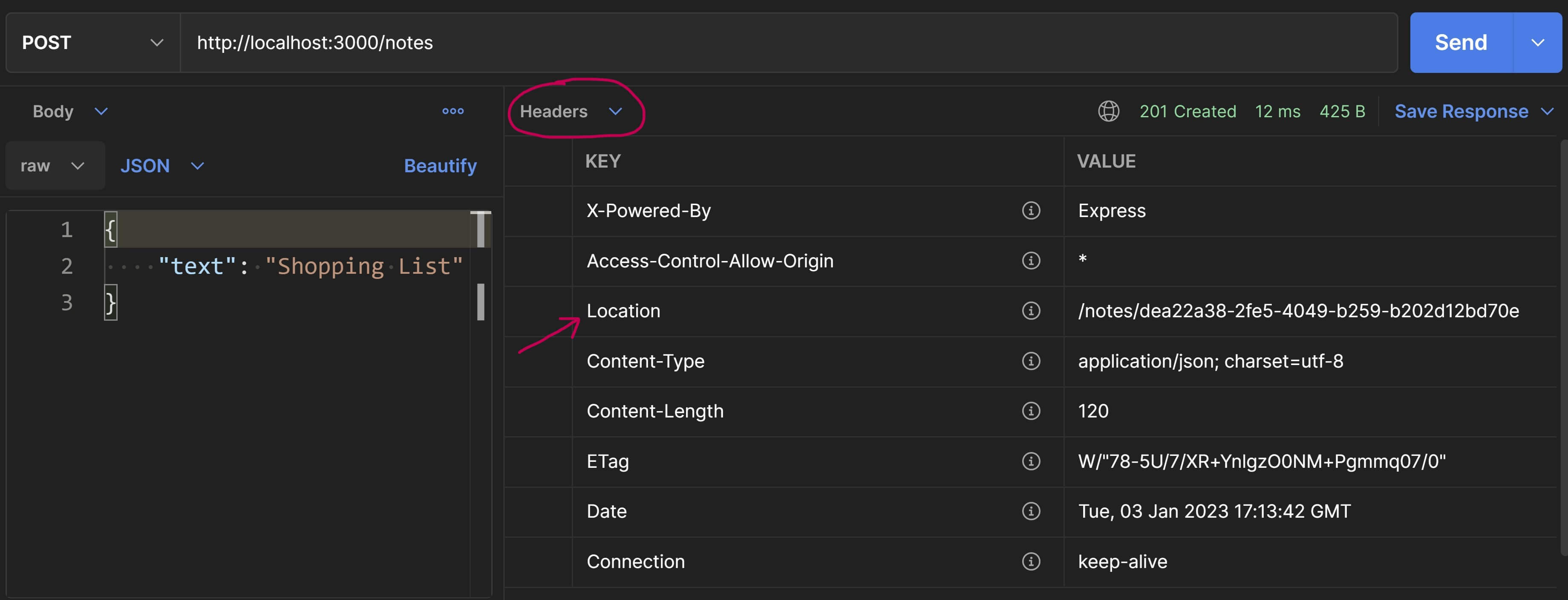

// Add the new note URI in the `Location` header as per convention const newNoteURI = `/notes/${ newNote.id }`; res.setHeader( "Location", newNoteURI ); // send success response to the client with status code `201 Created`. // Also include the new note object in the response payload. res.status( 201 ).json( newNote );}Let's test this out in Postman. We'll first send a POST request without a body so that we can test the 400 Bad Request validation.

Great👍! The validation works.

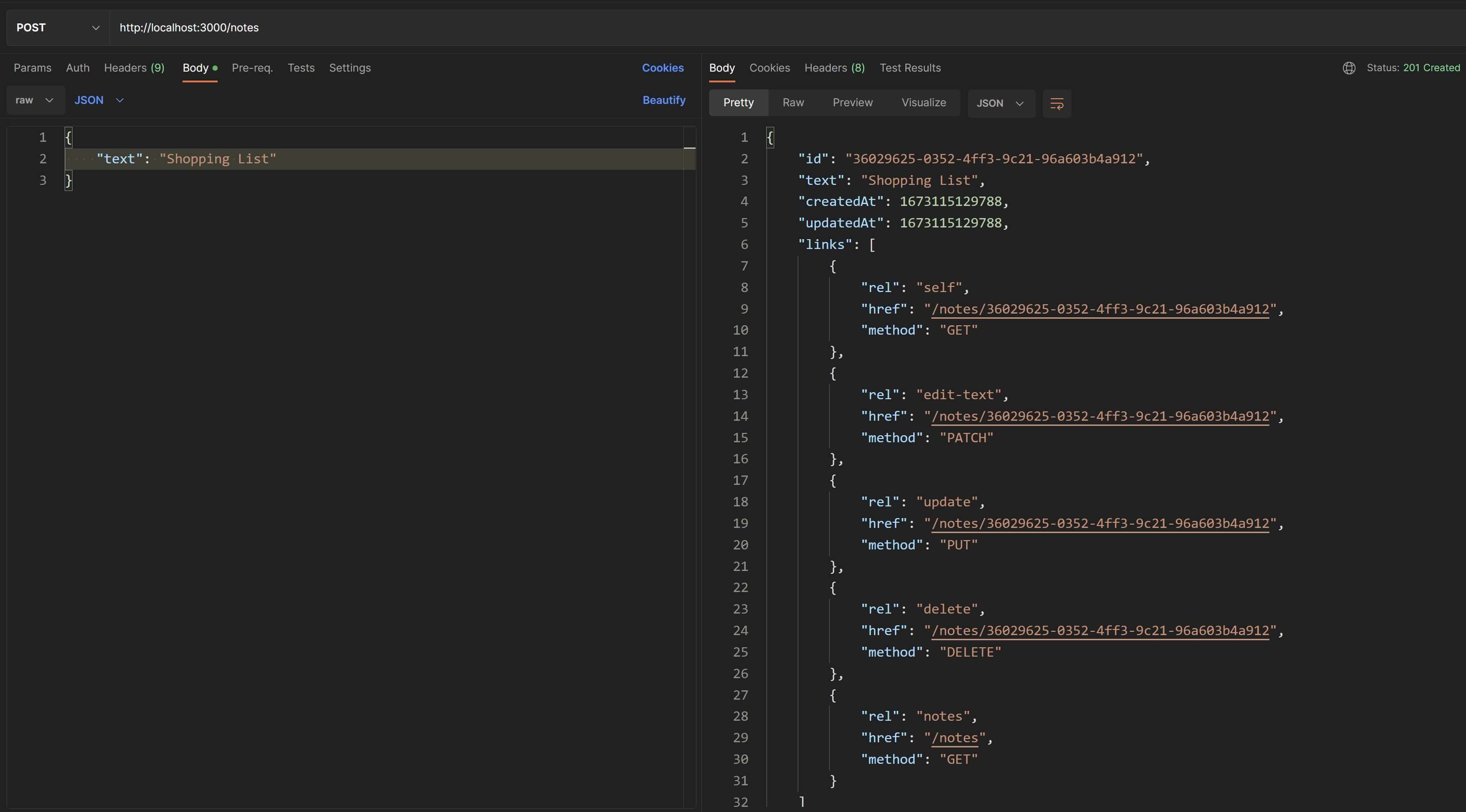

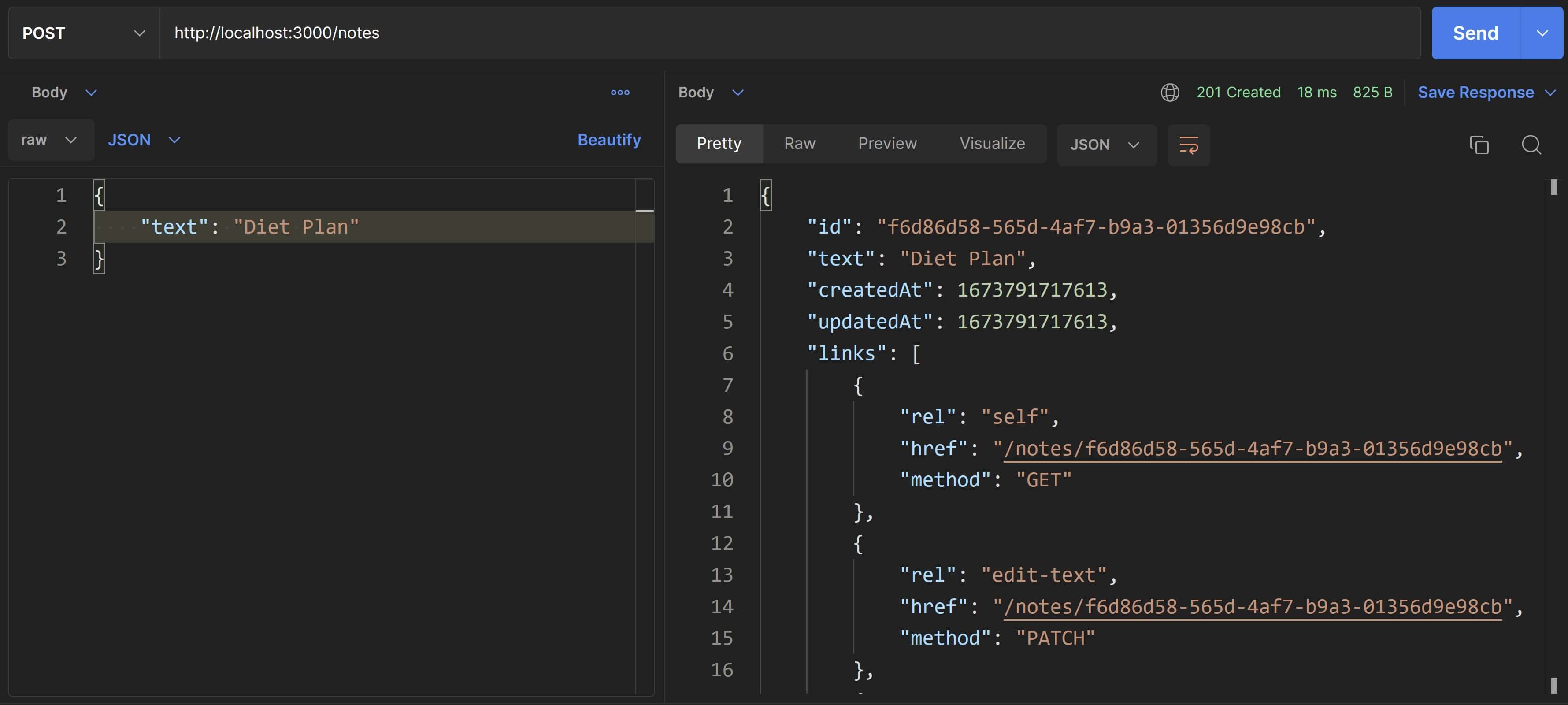

Let's try adding a new note. This time, we'll need to send a payload along with the request body. To do that in Postman, select the "Body" tab in the Request pane, select the type of data as "raw" and sub-type as "JSON". Specify a JSON with a single property text as shown in the screenshot below and you'll receive a 201 Created response with the note object in the body.

Also, if you switch to the "Headers" section in the Response pane in Postman, you'll see the URI of this new note in the Location header and you can use that to fetch the new note's detailed info.

Done✅! We have successfully implemented the POST endpoint for the Thunder Notes API.

Since POST requests create a new resource, they change the server state and so are not safe. Also, multiple identical POST requests will end up creating the same resource multiple times so they are also not considered idempotent.

PUT

PUT is also used to send data to the server in the form of a payload in the request body and this data can technically be used for creating or updating a resource.

But as a convention, we use PUT to update a resource on the server and not to create one.

With PUT, the client sends the entire resource representation in the request body and the server replaces the existing representation with this new one. This is why PUT is typically used on singleton resources like /notes/{id} and not on collection resource like /notes because it is rare that an entire collection will be replaced by what has been sent in the request body.

For example, in our tutorial, the resource representation of a note object is:

{ id: "2", text: "Exotic Vacation plan", createdAt: 1671580800000, updatedAt: 1672012800000 }Suppose the client wants to update this note, say for example, change the text from "Exotic Vacation Plan" to "Europe Trip". In order to perform this update with a PUT request, the client will update the text and updatedAt fields in the note object and then include the entire updated note object as JSON in the request body.

But suppose the client only sends the updated text field as JSON like this:

PUT /notes/{id}

{ "text": "Exotic Vacation plan"}Then the server will replace the existing note object with this one meaning the new note object will not have the fields id, createdAt and updatedAt resulting in data loss.

This is why when using PUT, clients must remember to first fetch the resource representation via GET, modify the required fields and then send the entire resource representation in a PUT request.

We'll implement two endpoints for PUT requests in the Thunder Notes API. One will be for the collection resource /notes which will serve as an example of how to disallow certain HTTP verbs on selected resources. The other will be for /notes/{id} which will update the entire note object.

PUT /notes // throw 405 Method Not AllowedPUT /notes/{id} // replace the resourceLet's define these routes in our app.

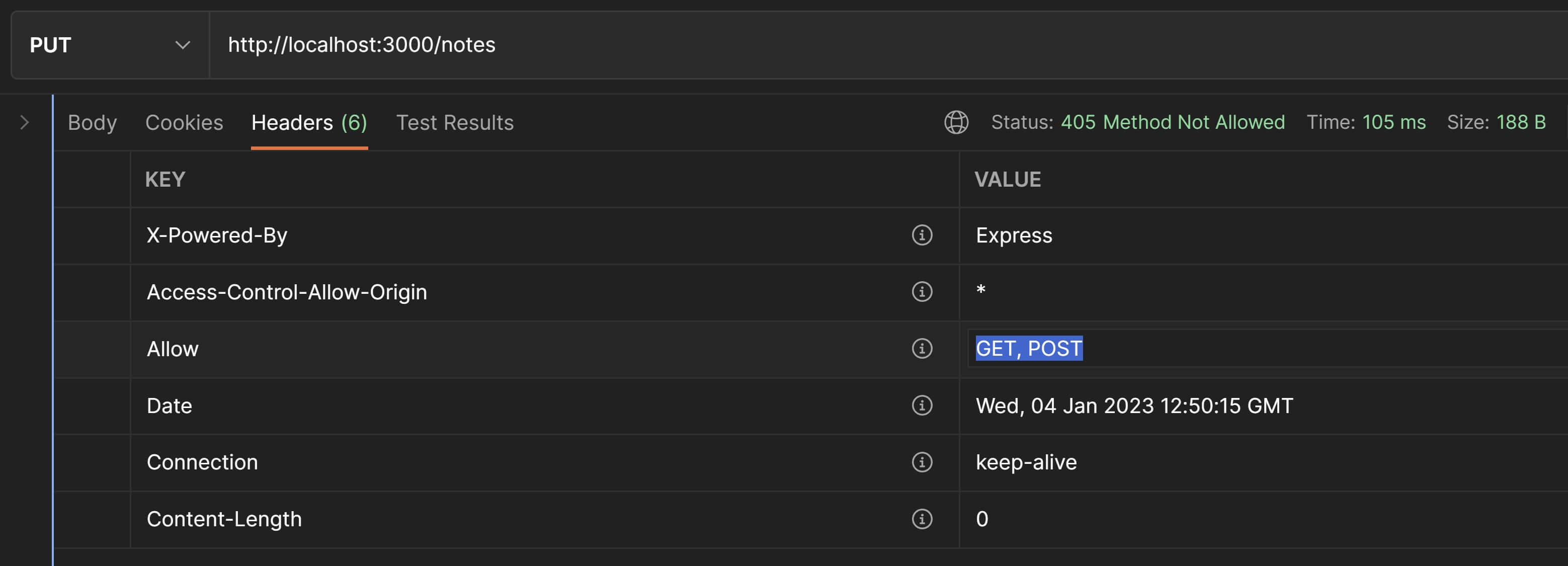

PUT /notes - Allow Header and 405 Method Not Allowed

We'll first handle the invalid route meaning PUT on the /notes collection.

We already have a route defined for this in routes.js like this:

router.put( "/notes", noteHandlers.handleInvalidRoute );Head over to the rootHandler.js file and add the below function definition to the handleInvalidRoute() route handler.

exports.handleInvalidRoute = ( req, res ) => { // set `Allow` header to indicate which HTTP methods are allowed for this resource res.setHeader( "Allow", "GET, POST" );

// return 405 Method Not Allowed res.status( 405 ).send();}Back in Postman, let's set the request method to PUT and the URI to http://localhost:3000/notes and hit Send. You'll see the Allow header in the response like in the screenshot below:

Fantastic😎! We now have a mechanism to inform API clients that the /notes collection only serves GET and POST requests and disallow all other HTTP verbs.

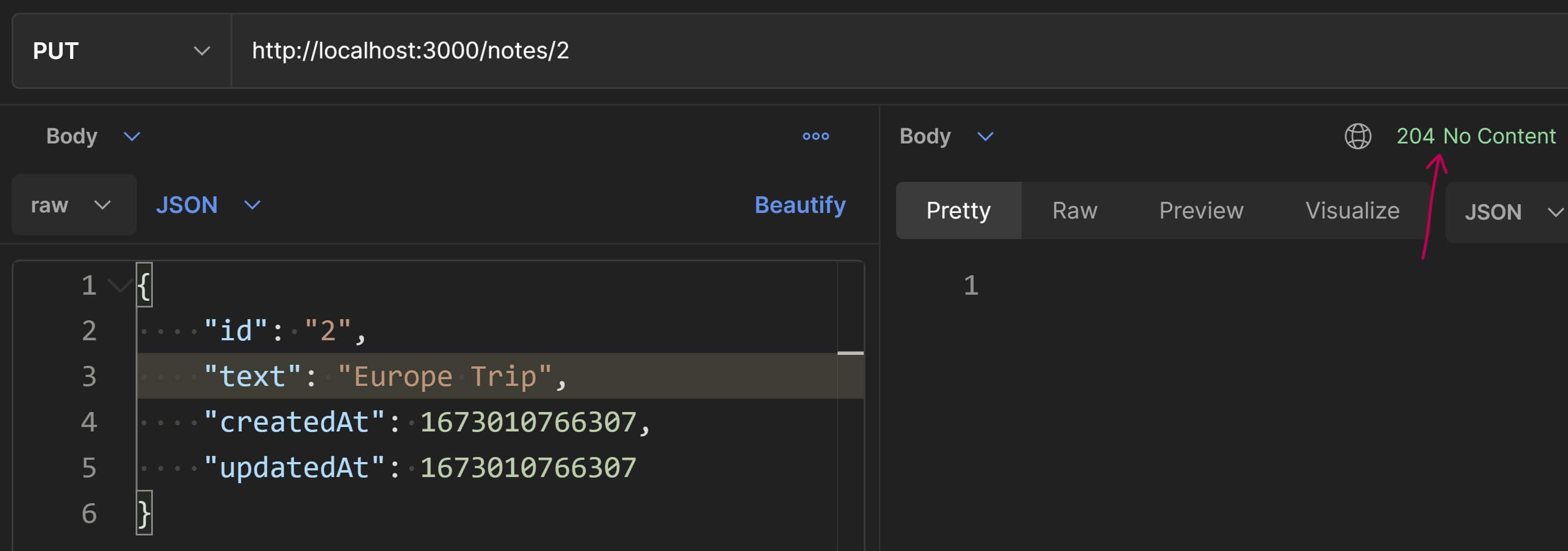

PUT /notes/{id}

Now we'll look at how to handle PUT requests on /notes/{id}. Use the below function definition for the updateNote() route handler function.

exports.updateNote = ( req, res ) => { // Request body should contain the complete representation of a note resource // If it doesn't, then return a `400 Bad Request` error. if( !( "id" in req.body ) || !( "text" in req.body ) || !( "createdAt" in req.body ) || !( "updatedAt" in req.body ) ) { return res.status( 400 ).json({ "message": "Invalid request" }); }

// update the note const { id, text, createdAt, updatedAt } = req.body; notesCtrl.updateNote( id, text, createdAt, updatedAt );

// send success response to the client res.status( 204 ).send();}The controller function updateNote() replaces the existing note resource representation with the new one.

If the update was successful, then we can:

- either send a

200 OKstatus code along with the updated note in the response body or - send a

204 No Contentstatus code with no response body. I have chosen to use this one for our example above.

Let's test this in Postman. Request method still set to PUT, enter the URI as http://localhost:3000/notes/2 and in the request body, enter the JSON given below.

{ id: "2", text: "Europe Trip", createdAt: 1673010766307, updatedAt: 1673010766307 }This is the updated representation of the note with id 2. We have changed the text from "Exotic Vacation Plan" to "Europe Trip". Also, just to drive this concept home that the entire resource representation is updated, we've also updated the createdAt and updatedAt timestamps. If you hit Send, you'll get the response as shown below:

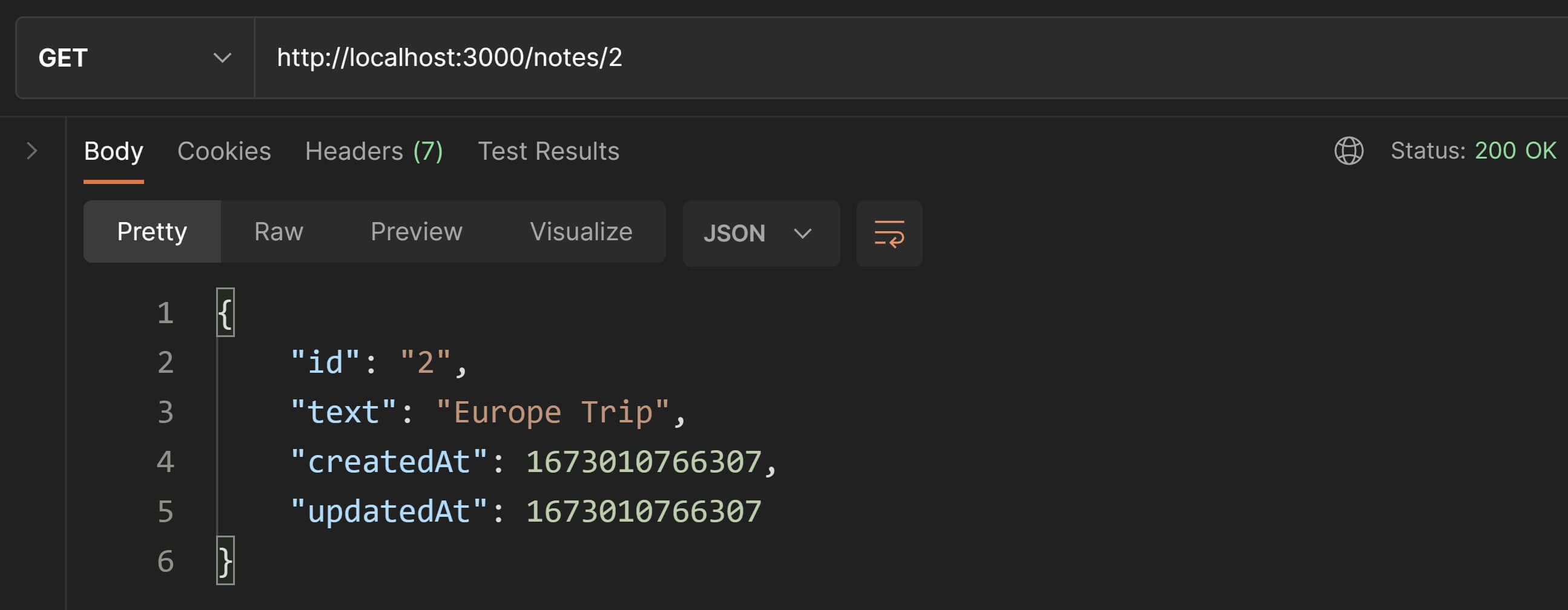

We can confirm that the note was updated by invoking another GET request to the same URI.

Done✅! Our PUT endpoints are working.

But what if the {id} in the URI does not correspond to an existing note like for example: /notes/123? In that case we need to validate the note's existence in the same way we did for the getNote() route handler.

Since this validation is going to be used by multiple route handlers in this tutorial, we'll refactor it into a re-usable function using middlewares.

Organize validation logic using Middlewares

Visualize an assembly line where the parts of a machine to be assembled move along the conveyer belt and at each checkpoint, some kind of part is fitted, removed, etc.

ExpressJS uses the concept of middlewares in a similar way. Middlwares are functions with access to the request object(req) and the response object(res). The request is routed through a chain of middleware functions(checkpoints). Each of these functions perform some kind of processing on the request or the response object. Once they are done, they invoke the next middlware or route handler function from the chain.

In our case, we'll implement a middleware which will check whether the {id} being requested for a single note resource i.e. /notes/{id}, exists or not. If it doesn't exist, we'll return a 404 Not Found. If it does exist, the middleware will invoke the route handler function.

First up, create a directory called middlewares inside the server folder. Create a file in it called noteValidation.js. Use the below code and save it in this new file.

const notesCtrl = require( "../controllers/notesController" );

/* This middleware function will perform some common validation steps for single "note" resources such as - Making sure the note exists else throw `404 Not Found`.

If all is well, then it will store the entire note object in `res.locals` and make it available for future middlewares.*/module.exports = function validateNote( req, res, next ) { // find the note with the input note ID in the database. // if the note does not exist, `undefined` will be returned. const note = notesCtrl.getNoteById( req.params.id ), noteExists = typeof note !== "undefined"; // if the note does not exist, then throw `404 Not Found`. if( !noteExists ) { res.status( 404 ).json({ "message": `Note with ID '${ req.params.id }' does not exist.` }); return; }

// if validation is successful, store the note for future use // in `res.locals.note` and call the next middleware. res.locals.note = note;

// invokes the next middleware next();}Did you notice that we are storing the matching note object in res.locals.note? This is so that subsequent middlewares can use this note object and do not have to re-fetch it from the database.

To use this middleware, open routes.js and import the new middleware at the top.

const validateNote = require( "./middlewares/noteValidation" );Now add this new middleware before the route handler functions of all singleton /notes/{id} resource routes.

// singleton `note` endpointsrouter.get( "/notes/:id", validateNote, noteHandlers.getNote );router.put( "/notes/:id", validateNote, noteHandlers.updateNote );router.patch( "/notes/:id", validateNote, noteHandlers.editText );router.delete( "/notes/:id", validateNote, noteHandlers.deleteNote );We can now remove the validation from the getNote() route handler so that the new definition is much more compact.

exports.getNote = ( req, res ) => { // Use the `res.locals` object to get the note provided by the // `noteValidation` middleware. res.json( res.locals.note );}Let's test everthing we have implemented so far. I'll leave the testing for the getNote() handler function to you since we've already covered that. We've also covered testing the happy-path scenario for PUT.

So now, we're only going to test whether this new middleware throws a 404 Not Found for invalid PUT requests or not.

Woo Hoo🎉! The middleware works as expected and intercepts the PUT request to the invalid note with ID 123 and throws a 404 Not Found.

Now back to PUT.

Since PUT updates a note resource, it modifies the state of the server which makes it not safe BUT it is idempotent. This is because it'll have the same effect on the server irrespective of the number of identical requests the client makes. For example, consider our previous Postman test for PUT /notes/2 with the new resource representation in the request body. Even if you invoke it multiple times, the note object will always be replaced by the same representation from the request body.

Does anybody ever use PUT?

If you're wondering that replacing entire resource representations is a rare scenario and will almost never be used, you may be right but there are scenarios where PUT becomes the obvious choice. Let's look at a real-life implementation of PUT with Github's Lock an Issue API service.

PUT /repos/{owner}/{repo}/issues/{issue_number}/lock

{ "lock_reason":"off-topic"}Here, lock is a singleton resource. The JSON payload in the above request is actually the complete representation for the lock resource and it is replaced completely everytime this API service is invoked.

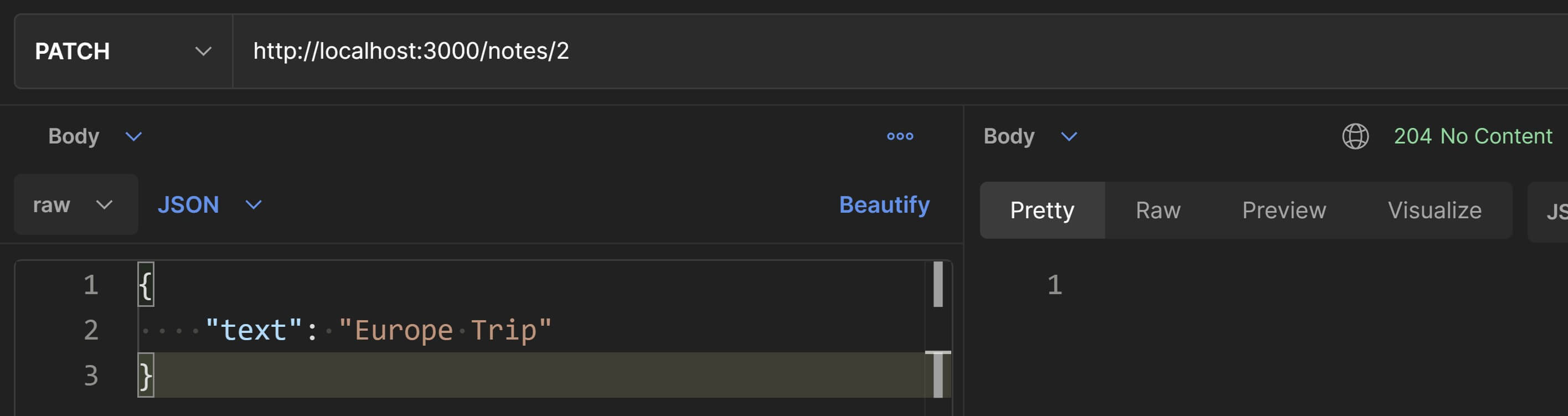

PATCH

The PATCH HTTP verb is also used to update a resource but unlike PUT which updates the resource in its entirety, PATCH only updates it partially.

So instead of sending the entire resource reprensentation, the client only needs to send the fields that need to be updated in the request body. The ID of the resource that needs to be updated is sent in the URI just like in a PUT request.

Similar to PUT, PATCH is also conventionally used only on singleton resources like /notes/{id} and not on collection resource like /notes.

We'll implement a single endpoint for PATCH in our Thunder Notes API.

PATCH /notes/{id}This endpoint will be used to edit the note's text only.

routes.js already has a route defined for this:

router.patch( "/notes/:id", validateNote, noteHandlers.editText );Let's define the corresponding route handler function: editText()

exports.editText = ( req, res ) => { // throw `400 Bad Request` if `text` is not provided in the request payload. if( !( "text" in req.body ) ) { return res.status( 400 ).json({ "message": "Invalid request" }); }

// everything seems fine, let's update the note text. notesCtrl.editText( res.locals.note, req.body.text );

// send success response to the client. No response body required. res.status( 204 ).send();}The validation that returns a 400 Bad Request is the same one we tested for POST so I'm not going to test that again but you can check that for yourself.

Also, you'll notice we return a 204 No Content with no response payload on success but you can also send a 200 OK with the updated resource in the response payload.

We'll now test the happy-path scenario i.e. editing the note's text. Let's make a PATCH request in Postman and test this out. We'll use the same URI and request body as the example from the previous PUT section.

Change the request method to GET to confirm whether the edit was performed or not. Notice that the updatedAt timestamp has also been updated. That happens automatically in the editText() controller function.

Booyah🥳! Our PATCH request is working as expected.

Since PATCH updates the state of the server, it is not safe. But PATCH is technically also not idempotent. Let's see why that is the case in a little more detail.

The proper way to PATCH

The way we have sent and handled PATCH in our example is easy and practical but not the proper way to do it.

PATCH can be used to do much more than just partially update values in the resource. It can technically be used to add or remove or even move a resource.

It can do all this when the client sends a list of operations that need to be performed in the request body as a JSON Patch Document. Don't get scared with that terminology. It is just an array of objects with each object representing an operation that should be performed on the specified resource on the server.

It looks something like this:

[ { "op": "test", "path": "/a/b/c", "value": "foo" }, { "op": "remove", "path": "/a/b/c" }, { "op": "add", "path": "/a/b/c", "value": [ "foo", "bar" ] }, { "op": "replace", "path": "/a/b/c", "value": 42 }, { "op": "move", "from": "/a/b/c", "path": "/a/b/d" }, { "op": "copy", "from": "/a/b/d", "path": "/a/b/e" }]So we send this list of operations in the PATCH request body and the server performs each operation in sequence.

The possibility of performing operations like these makes PATCH a non-idempotent method.

So should we use PATCH in the easy way or the not-so-easy proper way?

It depends on your personal preference or project requirements.

For example, Netflix's Genie REST API uses PATCH the proper way with the list of operations in the request body.

The Github API and the WIX API on the other hand use the simpler approach using PATCH to partially update the resource.

In my opinion, the easy way seems fine for most scenarios.

We can also skip using PATCH all together and just use PUT to update the entire resource.

Whatever you do, don't use PUT to make partial updates because remember that PUT is idempotent and PATCH is non-idempotent.

For more information on how to properly use PATCH, read Please. Don't Patch Like That.

DELETE

The DELETE HTTP method is used to delete a resource on the server.

DELETE is typically used on singleton resources and not on collection resources since deleting an entire collection of resources is a rare scenario.

The client only specifies the ID of the resource to be deleted in the URI. A payload is usually not required in the request body.

We'll implement a single DELETE endpoint for our Thunder Notes API.

DELETE /notes/{id}routes.js already has a route defined for this:

router.delete( "/notes/:id", validateNote, noteHandlers.deleteNote );Let's define the corresponding handler function: deleteNote()

exports.deleteNote = ( req, res ) => { // delete the note from the database // The first argument in the controller function `deleteNote()` is `userId`. // We'll deal with user authentication later so for now, // just use a hardcoded value of "1" for userId. // "1" happens to be the ID of a pre-defined user in "data/data.js". notesCtrl.deleteNote( "1", res.locals.note );

// send success response to the client res.status( 204 ).send();}Here also, we're sending a 204 No Content with no response payload on success but you can also send a 200 OK with the deleted resource in the response payload if needed.

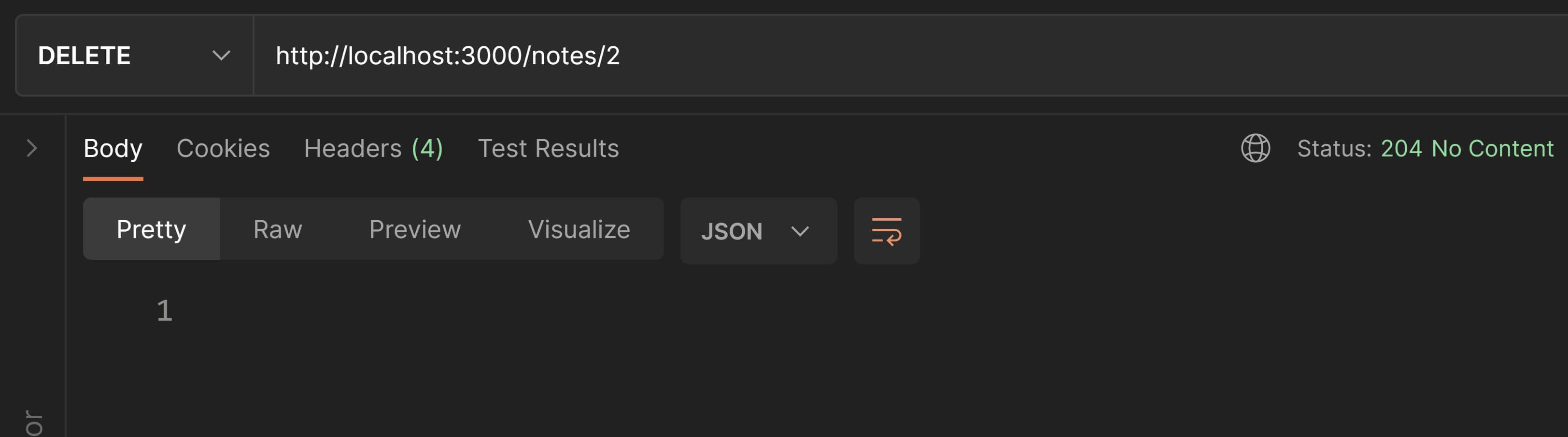

Let's test this in Postman. Set the request method to DELETE and the URI to http://localhost:3000/notes/2 and hit Send.

Now to confirm whether the delete worked, change the request method to GET and hit Send. You should receive a 404 Not Found which confirms that the note has been deleted.

Since DELETE updates the state of the server, it is not safe BUT, it is idempotent.

How can DELETE be idempotent since the first time we call it, it'll delete the resource and return a 204 No Content but subsequent DELETE calls with the same ID will return a 404 Not Found since the resource has already been deleted. That doesn't seem like being idempotent, does it? 🤔

Remember that idempotency doesn't depend on the HTTP status code being returned but refers to the state of the system after the request has been processed completely. When the 1st DELETE operation completes, the resource doesn't exist on the system. Subsequent DELETE invocations on the same resource don't change the state and the resource still doesn't exist. So the state of the server remains the same even after multiple identical DELETE calls.

Done✅! We've successfully implemented the DELETE endpoint for our Thunder Notes API.

To recap, we'd already covered the sub-constraint, Identification of Resources which falls under the Uniform Resource core REST constraint. Now we've also seen how to send complete resource representations in the responses of our API services along with proper headers set and how to manipulate resources through their representations in our PUT and POST calls. This means we've successfully implemented two more sub-constraints namely Self-descriptive messages and Manipulation of resources through representations.

We'll now have a look at how to implement the last remaining sub-constraint which is HATEOAS.

Hypermedia As The Engine Of Application State(HATEOAS)

HATEOAS stands for Hypermedia As The Engine Of Application State. This is also a sub-constraint categorized under the Uniform Interface core REST constraint.

It's a feature that enables clients to browse your API in much the same way as you'd browse a web page. When you visit a web page, you read the page content which also has relevant links to other web pages that provide more information about a specific topic. In much the same way, HATEOAS enabled APIs provide relevant links to other API endpoints in the response body, that allow clients to navigate the API.

I have explained the concept behind HATEOAS in detail in the previous article in this series. In this article, we'll look at how we can actually implement HATEOAS in a REST API.

From a high-level, the implementation will deal with the inclusion of a property called links for each resource within the API response.

HATEOAS for a singleton resource

Consider the example of an e-commerce shopping app where we fetch details about a product.

GET /products/21A sample response from the above request would look something like this:

{ "product_id": 21, "name": "Dell XPS 7590" "price": "₹150000", "category": "Laptop", "in_stock": true, ... ...}Let's make this response HATEOAS-compliant.

{ "product_id": 21, "name": "Dell XPS 7590" "price": "₹150000", "category": "Laptop", "in_stock": true, ... ... "links": [ { "rel": "self", "href": "/products/21", "method": "get" }, { "rel": "reviews", "href": "/products/21/reviews", "method": "get" }, { "rel": "add-to-cart", "href": "/users/112/cart/items", "method": "post" }, { "rel": "add-to-favs", "href": "/users/112/favorites", "method": "post" }, { "rel": "add-review", "href": "/products/112/reviews", "method": "post" } ]}Apart from the usual data fields, we have a links property which is an array of objects that contains relative URIs. Each URI either indicates other API endpoints the client can invoke to fetch more information related to the current resource or further actions a client is allowed to perform on the current resource. For example, the client can fetch reviews for the product using rel: "reviews" or the client can perform an action like add the product to cart using rel: "add-to-cart".

The rel property stands for relation and indicates the relation between the resource in the response and the URI link. The href property specifies the URI slug and the method property specifies the corresponding HTTP verb. A rel value of "self" represents the URI slug of the current resource.

So while anchor tags and links on web pages enable humans to browse web pages, this links property enables client applications to browse APIs.

What's more, with HATEOAS, the API can control what links and actions are available to the user dynamically. For example, if the product is not in stock, the user should not be able to add the product to the cart. In that case, the API can simply omit the rel: "add-to-cart" link from the links array in the response.

{ "product_id": 21, "name": "Dell XPS 7590" "price": "₹150000", "category": "Laptop", "in_stock": false, ... ... "links": [ { "rel": "self", "href": "/products/21", "method": "get" }, { "rel": "reviews", "href": "/products/21/reviews", "method": "get" }, { "rel": "add-to-favs", "href": "/users/112/favorites", "method": "post" }, { "rel": "add-review", "href": "/products/112/reviews", "method": "post" } ]}The client can read this response and implement the UI accordingly and not expose the ADD TO CART option to the end-user.

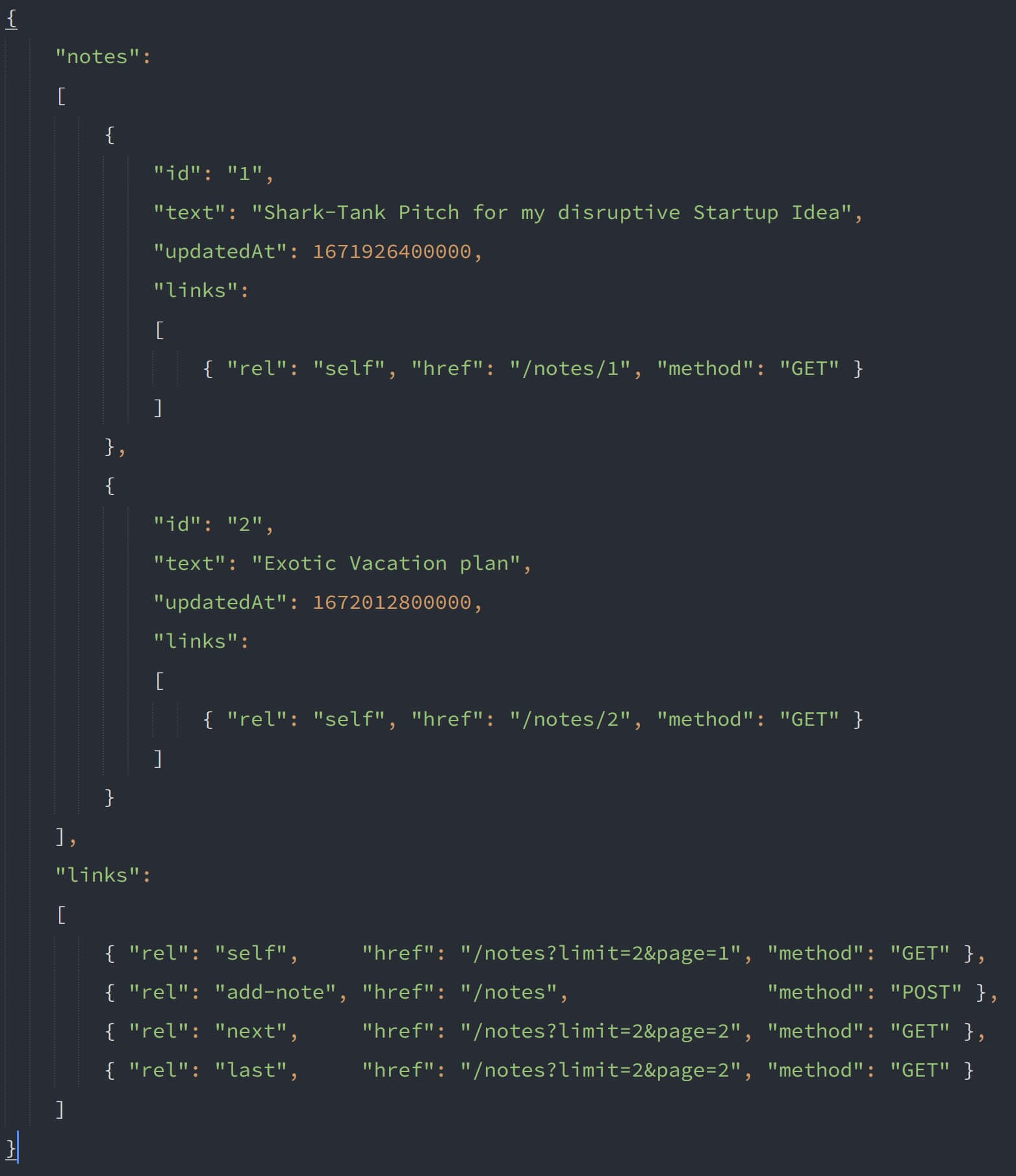

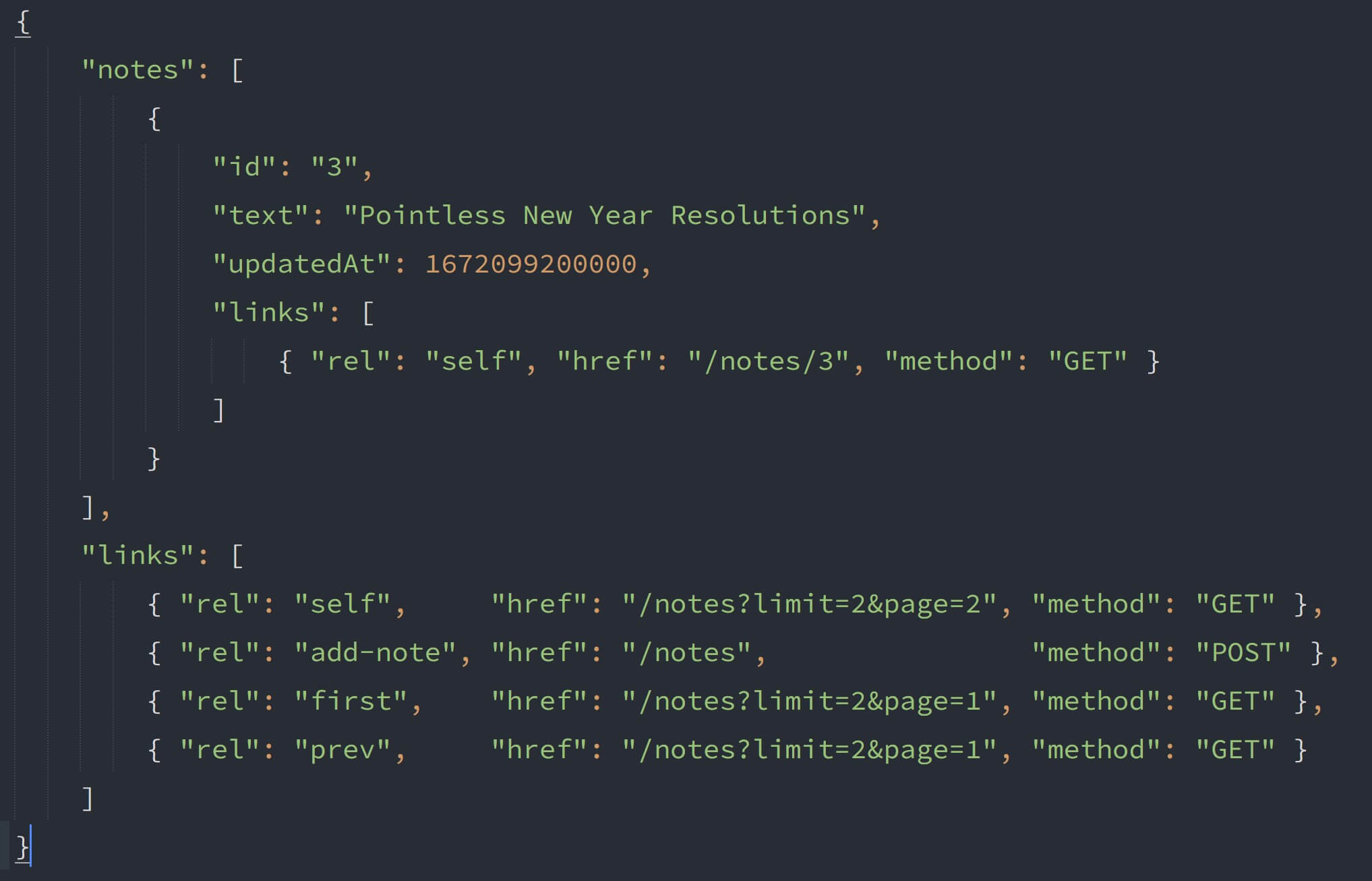

HATEOAS for a collection resource

Let's analyze a HATEOAS-compliant response for a collection resouce.

In continuation of the previous example, let's suppose the client invokes the rel: "reviews" URI to retrieve all the reviews for a product.

GET /products/21/reviewsLet's see how a HATEOAS-enabled response for the above request might look like.

{ "reviews": [ { "review_id": 35, "user_id": 84, "rating": 5, "content": "Awesome Laptop", "links": [ { "rel": "self", "href": "/products/21/reviews/35", "method": "get" }, { "rel": "like", "href": "/products/21/reviews/35", "method": "post" } ] }, { "review_id": 36, "user_id": 629, "rating": 4.5, "content": "Great Performance", "links": [ { "rel": "self", "href": "/products/21/reviews/36", "method": "get" }, { "rel": "like", "href": "/products/21/reviews/36", "method": "post" } ] } ], "links": [ { "rel": "self", "href": "/products/21/reviews", "method": "get" }, { "rel": "product", "href": "/products/21", "method": "get" }, { "rel": "add-review", "href": "/products/112/reviews", "method": "post" } ]}For collection resources, the response is usually structured as an array. But here, it's no longer an array but an object with two main properties: reviews and links. The reviews property has the actual collection array. The links property has the URIs to perform further actions in the context of the reviews collection.

So for example, rel: "self" is the URI to the current reviews collection. rel: "product" is like a BACK TO PRODUCT DETAILS link to go back one step and fetch the product details again. rel: "add-review" is an action that the user can take.

But apart from this, did you notice that each individual review inside the reviews array also has a links property? There are two rel URIs for each review. The rel: "self" URI allows the user to fetch more details about that particular review. rel: "like" allows the user to mark the review as helpful.

This is how HATEOAS enables clients to explore an API. A response from any API endpoint allows them to explore the API further and also presents options to go back a step if needed.

What is the advantage of implementing HATEOAS?

So what is the advantage of doing all of this? It seems useful but certainly tedious to implement.

The answer is Independent Evolvability.

If HATEOAS is implemented properly on both the server and the client side, then the client no longer needs to invoke APIs using harcoded URIs. Instead, it uses the URIs associated with the rel properties to invoke other API endpoints.

If at a later point in time, the API designers for whatever reason decide to change the URI structure, the clients will remain unaffected because they do not rely on the URIs directly.

From our previous example of product reviews, let's suppose that the API designers decide to change the resource name from reviews to ratings like this:

GET /products/21/ratingsSince the server and client have both implemented HATEOAS properly, this change won't cause any breaking changes on the client-side because the clients would have used the URIs associated with rel properties.

Also, it's not just about the URIs. The API can even change the logic behind exposing certain actions to the end-user and if the clients have followed HATEOAS properly, then nothing will break on the front-end.

Again referring to the previous example of products, we know that the current logic dictates that if the product is not in stock, then the rel: "add-to-cart" URI will not be included in the links array in the response.

But suppose the API designers decide to restrict the current authenticated user from ordering a product if the person resides in a quarantine zone due to Corona virus restrictions, they can simply omit the rel: "add-to-cart" URI from the response even though the product is in stock. Again, if the client has followed HATEOAS properly, then nothing will break on the client-side.

This way, the client and the API server can continue to evolve independently.

Implementing HATEOAS in the Thunder Notes API

We'll first target our singleton note resource i.e. /notes/{id}.

HATEOAS for /notes/{id} endpoints

We'll create a new controller file called hateoasControllers.js where we'll store the logic behind making our responses, HATEOAS-compliant. Go ahead and create that file inside the controllers folder and add the below function definition in it.

/* Adds the `links` property to a note object */exports.hateoasify = responseNote => { const links = [ { rel: "self", href: `/notes/${responseNote.id}`, method: "GET" }, { rel: "edit-text", href: `/notes/${responseNote.id}`, method: "PATCH" }, { rel: "update", href: `/notes/${responseNote.id}`, method: "PUT" }, { rel: "delete", href: `/notes/${responseNote.id}`, method: "DELETE" }, { rel: "notes", href: `/notes`, method: "GET" } ];

return { ...responseNote, links };}This method will take a note object as an input and add a property called links to it. This property will point to an array of relative-URIs which will expose options like updating or deleting the note.

Let's import this new method at the top in noteHandlers.js

const { hateoasify } = require( "../controllers/hateoasControllers" );Now in the getNote() handler function, before returning the note object in the response, pass it as an argument to the hateoasify() method that we've defined just now.

exports.getNote = ( req, res ) => { // Use the `res.locals` object to get the note provided by the // `noteValidation` middleware. res.json( hateoasify( res.locals.note ));}Do the same thing for the createNote() handler function.

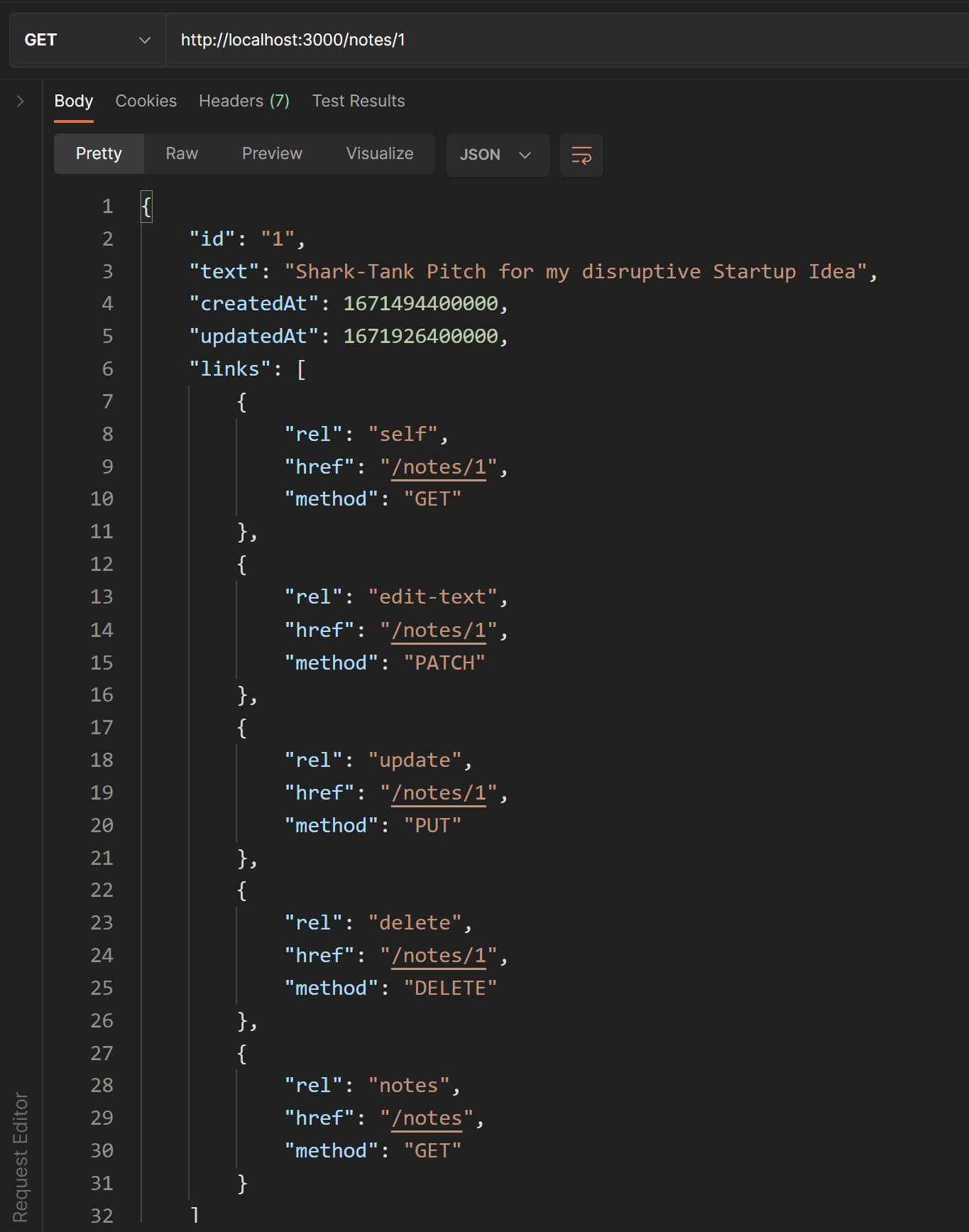

exports.createNote = ( req, res ) => { ... ... res.status( 201 ).json( hateoasify( newNote ));}Back in Postman, let's test the GET request to /notes/1. Notice the links property in the response JSON.

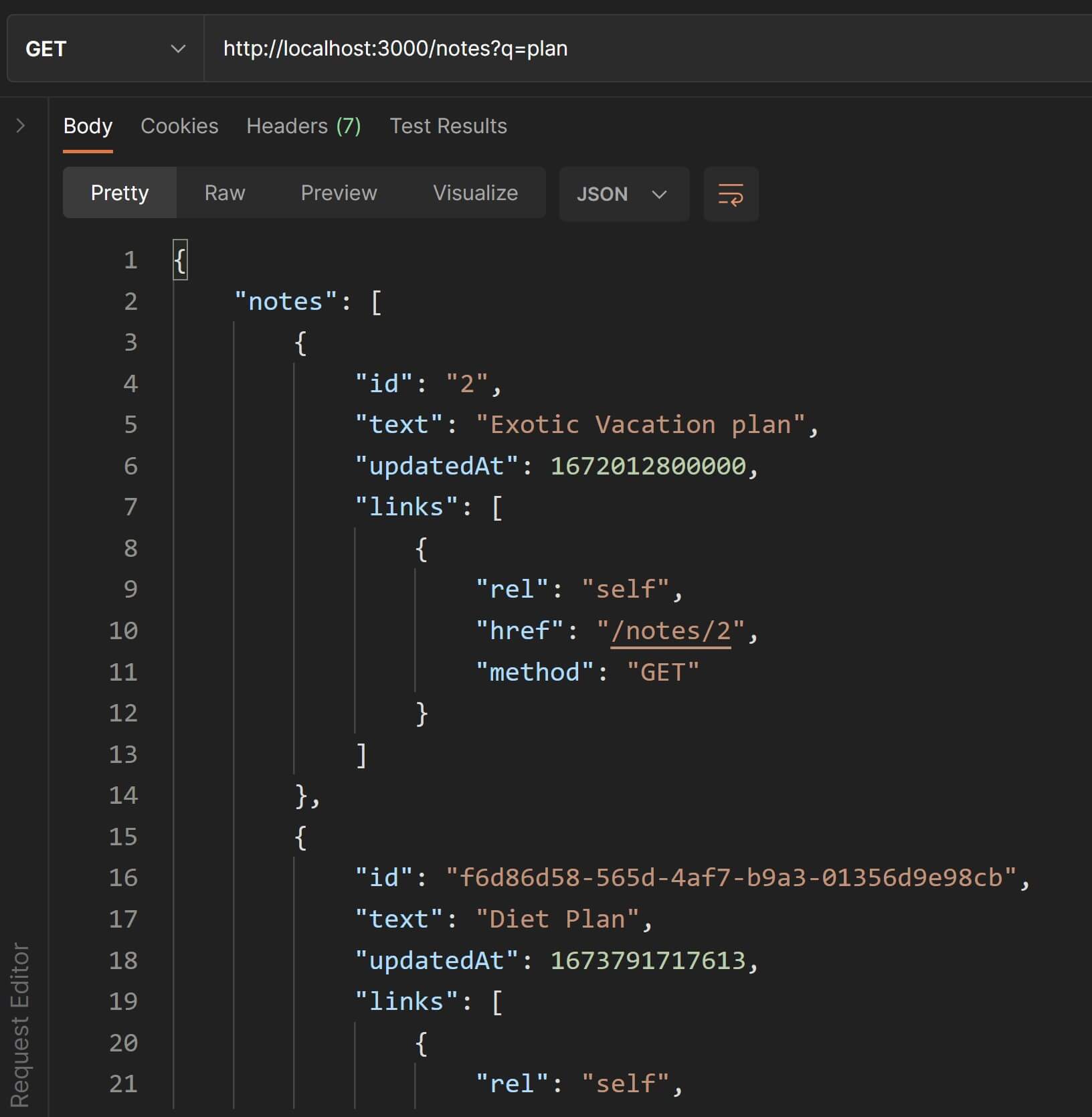

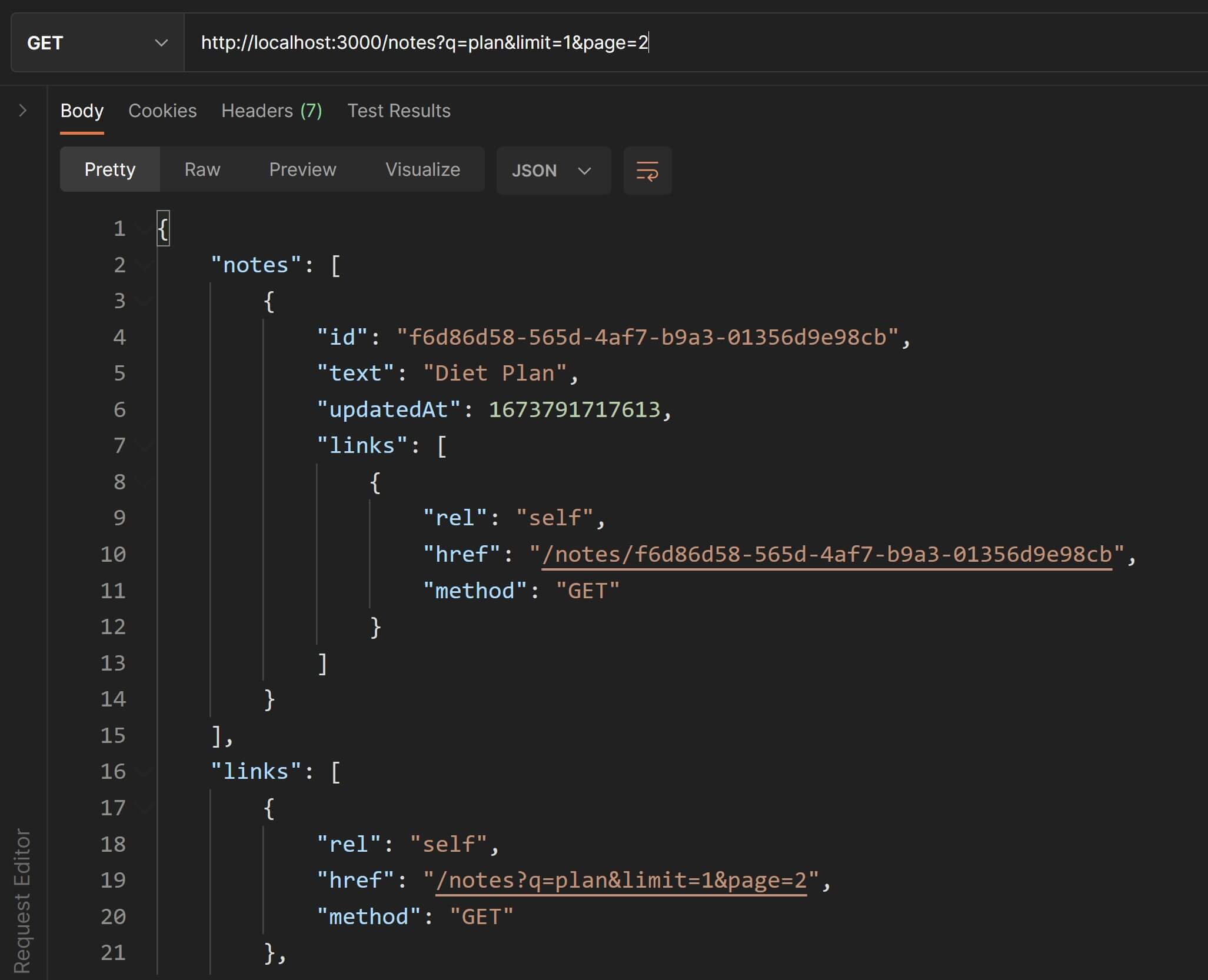

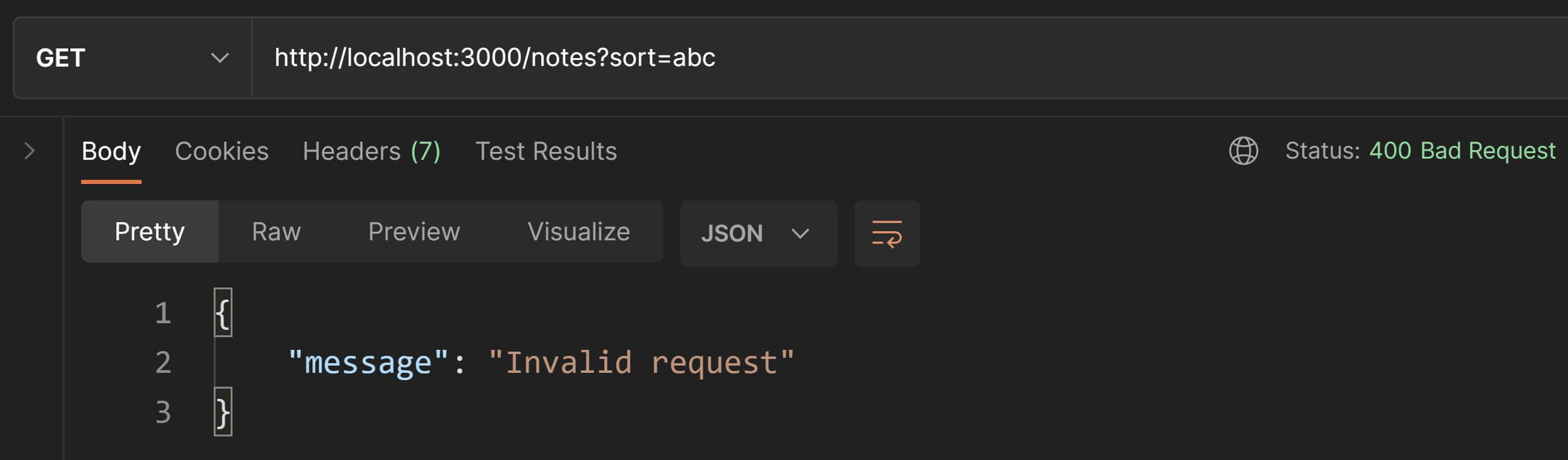

Now let's try to send a POST request to create a new note. The new note object in the response will contain the same HATEOAS links as in the previous GET request.

Great🤘! We have made our /notes/{id} endpoints HATEOAS compliant.

HATEOAS for the /notes endpoint

Since we have to treat singleton and collection resources differently while making their representations HATEOAS-compliant, we'll rename the previous method from hateoasify() to hateoasifyNote() and create a new method called hateoasifyNotes() for collection resources.

We'll still have a hateoasify() method which will simply call the above two functions, depending upon the type of resource i.e. an array(collection) or an object(singleton).

Let's define these three functions in hateoasControllers.js.

Rename the hateoasify() method to hateoasifyNote(). Also, we'll no longer need to export this function so convert it to a simple function declaration. The rest of the function definition will remain the same.

function hateoasifyNote( responseNote ) { ... ...}Next, we'll add a hateoasifyNotes() method. This method will add a links property to the response for the entire collection as well as a links property for each note within the collection. We'll not export this function either.

/* Adds the `links` property for a collection of notes and for each note within it.*/function hateoasifyNotes( responseNotes ) { // Add a `links` array to each individual note object within the collection. // The `self` URI can be used to fetch more info about the note. responseNotes.forEach( n => { n.links = [{ rel: "self", href: `/notes/${n.id}`, method: "GET" }] });

// Add a "links" array for the entire collection of notes. const links = [ { rel: "self", href: `/notes`, method: "GET" }, { rel: "add-note", href: "/notes", method: "POST" }, ];

return { notes: responseNotes, links };}Now we'll add the new hateoasify() method which will simply invoke either hateoasifyNote() or hateoasifyNotes() depending on whether the input argument is an object or an array. Note that this is the only method we are going to export.

/* Adds HATEOAS links to a collection of notes or an individual note. */exports.hateoasify = ( response ) => { const isCollection = Array.isArray( response );

return isCollection ? hateoasifyNotes( response ) : hateoasifyNote( response );}Now back in noteHandlers.js, there will be no changes to getNote() and createNote() because the call to hateoasify() will correctly call hateoasifyNote() and add links for the singleton note resource endpoint.

But now that we have implemented hateoasifyNotes(), let's make the response of the /notes collection endpoint HATEOAS-compliant as well by calling hateoasify().

Replace the method definition for getNotes() handler function with this:

exports.getNotes = ( req, res ) => { // get all the notes in the database const notes = notesCtrl.getAllNotes(); // send a `200 OK` response with the note objects in the response payload res.json( hateoasify( notes ) );}Since this is a collection, hateoasify() will in turn invoke hateoasifyNotes().

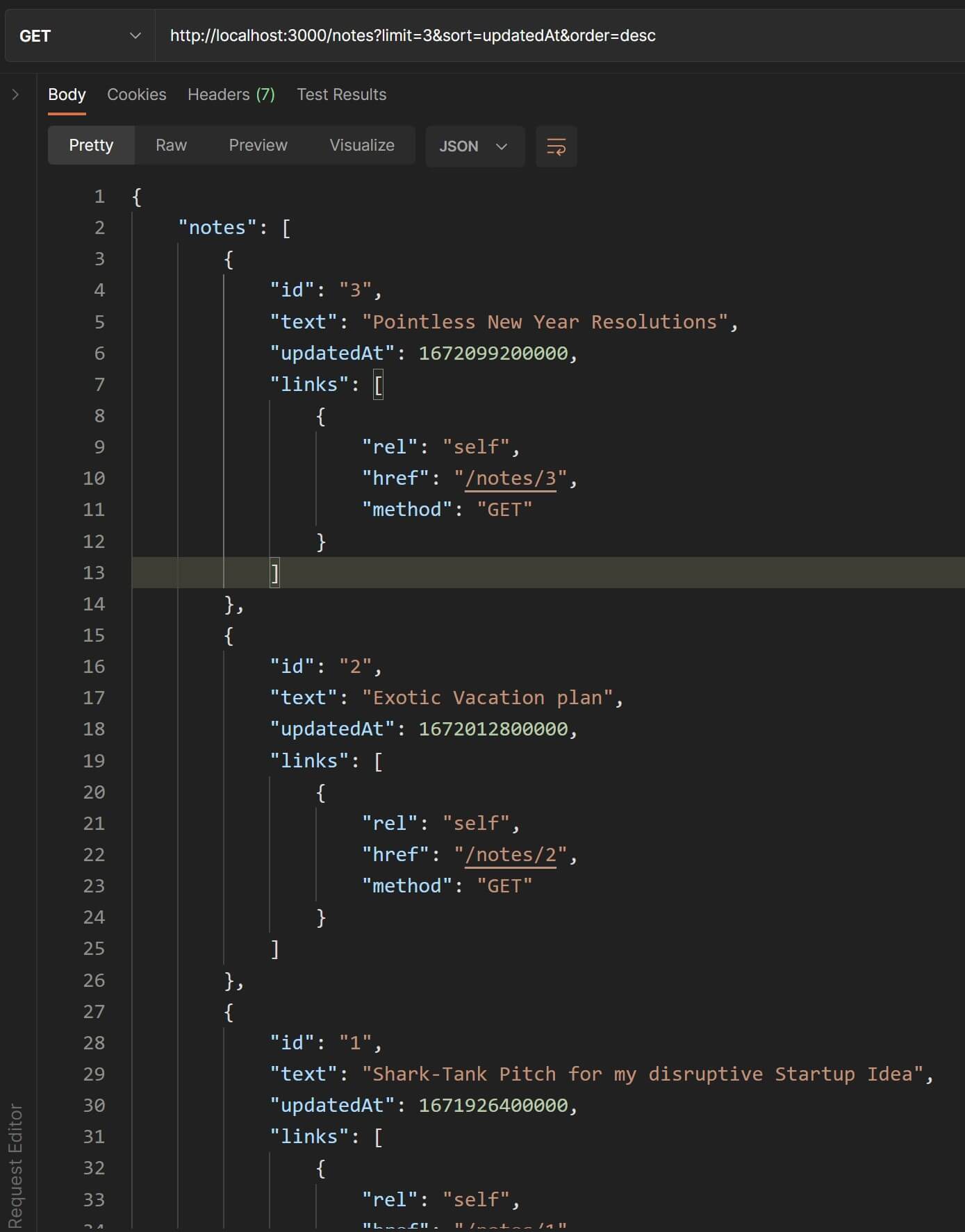

Let's test this out in Postman. If you send a GET request to the /notes endpoint, you should see a response like the one below(no screenshot since the response is quite lengthy).

{ "notes": [ { "id": "1", "text": "Shark-Tank Pitch for my disruptive Startup Idea", "createdAt": 1671494400000, "updatedAt": 1671926400000, "links": [{ "rel": "self","href": "/notes/1","method": "GET" }] }, ... ... ... { "id": "5", "text": "Parenting 101", "createdAt": 1671840000000, "updatedAt": 1672272000000, "links": [{ "rel": "self", "href": "/notes/5", "method": "GET" }] } ], "links": [ { "rel": "self","href": "/notes","method": "GET" }, { "rel": "add-note","href": "/notes","method": "POST" } ]}Awesome🙌! We've successfully implemented HATEOAS for the /notes collection endpoint.

HATEOAS links in error responses

You can also choose to include HATEOAS links in error responses.

For example, the 404 Not Found response for GET /notes/{id} can include a rel: "notes" URI that will serve as a BACK TO ALL NOTES link if the client or user tried to access a note that doesn't exist.

{ "message": "Note with ID '123' does not exist.", "links": [ { "rel": "notes", "href": "/notes", "method": "GET" } ] }This response not only informs the client that the requested resource was not found, but also provides a URI to follow next.

HATEOAS Specifications

While you can certainly structure HATEOAS-compliant API responses in the format that we have seen in our examples or extend or customize it to better fit your personal or project requirements, there are some specifications that define certain standards and guidelines for structing HATEOAS-compliant responses. Some of these are:

For example, Netflix's Genie REST API uses the HAL approach.

For reference, check out this article with more information on choosing a hypermedia format.

HATEOAS using frameworks/libraries/plugins

Our HATEOAS implementation in the Thunder Notes API works well for our little tutorial demo but when building a real-life full-scale API, you may need to use a plugin, framework, library, etc. that will be able to simplify the process of making your API HATEOAS-compliant.

For example, there is an NPM-package called express-hateoas-links that extends ExpressJS functionality and makes it easier to append HATEOAS links to API responses.

The language or API framework that you decide to use for your API may have similar extensions to achieve this.

Irrespective of which HATEOAS specification or library or framework you choose, the purpose will always remain the same, which is to enable explorability and discoverability in the API.

Congratulations🥳! HATEOAS was the last remaining sub-constraint and by successfully applying it to the Thunder Notes API, we have completely implemented one of the most important core REST constraints i.e. Uniform Interface. To recap, so far we have implemented two main REST constraints, Client-Server and Uniform Interface.

Authentication and Authorization

In most cases you'll need some form of security mechanism in order to protect your API resources from unauthorized access unless you plan to design a public API that provides unrestricted access to its resources.

This is where authentication and authorization come into play.

Authentication involves verifying the identity of the user/client and confirming that the user is who he/she claims to be. For example, when you login to your email account, you provide your username and password and prove that you are the owner of your account.

Authorization means making sure the user/client has access to a particular resource. For example, you may be able to login as a customer on a website but you still won't have access to their admin backend because that would require elevated authorized access.

In the context of APIs, authorization becomes mandatory for protected resources. Authentication will be required if the entire application supports the notion of user accounts(registrations and logins).

Also, an API server needs to authorize access not just for the users of the client applications but also for the clients themselves. This is typically done to ensure that a malicious "Evil Co." client app cannot call and use private APIs without first registering and verifying its identity.

The Stateless core REST constraint mandates that REST APIs not store any information pertaining to the current user session and that user sessions should be managed at the client-side. Every request, even from the same authenticated user, is treated as a new request. This means that every request must carry all the necessary information to authenticate and authorize the user and enable access to the API resource. All the approaches we'll study next will be based around this central idea.

There are different ways in which an API server can implement authentication and authorization to verify the identity of client applications and its users. They are:

- Basic Authentication

- API Keys

- Bearer authentication

- OAuth( 2.0 )

- HMAC Authentication

- Others

Let's look at these approaches in more detail.

1. Basic Authentication

As the name suggests, this is a very simple authentication mechanism where the client sends the username and the password of the user with every API request in the form of a base64 encoded string in the request header.

The API server reads this string and authenticates the user and if the credentials are valid, it allows access to the protected resource.

For example, if the username is saurabh and password is 123456, then the client will encode the string saurabh:123456 using base64 and place it in the Authorization request header as

Authorization: Basic c2F1cmFiaC8xMjM0NTY=Since base64 is not difficult to decode, this scheme should always be used over an HTTPS connection.

As a real-life usage example, Github's API makes use of basic authentication for testing in a non-production environment.

Also, Twitter's API makes use of basic authentication for some of their API services.

2. API Keys

An API key is a long string of alpha-numeric characters which may also contain special characters and which seems cryptic to a human.

In this mechanism, the API server generates and assigns an API key to a client that needs access.

The client can then send this API Key with every request in order to authenticate itself.

Please note that API keys are typically used to authenticate API client applications like websites or mobile apps and NOT their customers or users. Customer/users of API consumer applications are typically authenticated using token-based mechanisms which we'll check out next.

Reference: https://cloud.google.com/endpoints/docs/openapi/when-why-api-key

The API key can be included in the request in many ways like:

- Query string parameter

X-Api-Keycustom request header- Bearer token

- Request Body

2.1. Query string parameter

The simplest way is including the API key as a query string parameter:

GET "https://example.com/api/resource?api_key=505000a6-deca-43a1-b6f1-5766ff5e030e"The drawback of this method is that it increases the risk of the API key being exposed.

The Trello API uses this approach for its API services.

2.2. X-Api-Key custom request header

One of the most popular choice by convention is to use this custom header in the request and set its value to your API key.

GET "https://example.com/api/resource"X-Api-Key: 505000a6-deca-43a1-b6f1-5766ff5e030ePlease note that this is not a registered HTTP header but widely used as a convention.

2.3. Bearer token

The API key can also technically be used as a Bearer token in the Authorization header as:

GET "https://example.com/api/resource"Authorization: Bearer 505000a6-deca-43a1-b6f1-5766ff5e030eWe'll learn more about Bearer Authentication after this section.

2.4. Request body🚫

There are some APIs that require that the API key be included in the body of the request. This is not a good practice as it requires that the clients make POST requests even for fetching data. It also mixes authentication with the actual request payload data. For these reasons, you should avoid using this approach.

POST "https://example.com/api/resource"

{ "api_key": "505000a6-deca-43a1-b6f1-5766ff5e030e", ... ...}3. Bearer authentication

Bearer authentication or Token authentication is a mechanism that uses bearer tokens to gain access to protected API resources and is one of the most widely used authentication approaches out there.

Bearer tokens are encrypted strings that are generated by the API server and assigned to a user typically in response to a login attempt at the client-side.

The client then includes the access tokens in every request to the API

GET "https://example.com/api/resource"Authorization: Bearer 505000a6-deca-43a1-b6f1-5766ff5e030eThe word bearer simply means that the "bearer of this token" should be authorized to access the protected resource.

The token that is used as the bearer token can have different formats. One of the most popular choices for token formats is JWT or JSON Web Tokens.

JSON Web Tokens

JWT is a token format or a way of arranging information in an authentication token.

Here is a sample JSON Web Token

eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.SflKxwRJSMeKKF2QT4fwpMeJf36POk6yJV_adQssw5cAs you can see it is comprised of 3 parts that are separated by a period("."):

- Header

- Payload

- Signature

Let's discuss each of these parts in more detail.

Header

The header contains information about the encryption algorithm and the token format. It is Base64 encoded to form the first part of the JWT. Here is the decoded version of the header in the above JWT:

{ "alg": "HS256", "typ": "JWT"}Payload

The payload typically contains some basic properties about the logged in user. Just like the header, the payload is also Base64 encoded to form the second part of the JWT. Here is the decoded version from our sampe JWT:

{ "sub": "1234567890", "name": "John Doe", "iat": 1516239022}There are some standard guidelines in place for the names of the properties inside the header and payload. This is why we have used keys like sub which stand for subject of the JWT which in this case is "the user" and the value for this key is the user's ID. iat stands for time the JWT was issued at. It's ok if you use custom names for keys but as much as possible, you should try and stick to the standardized key names.

Please note that you should never include sensitive user information in the payload.

Signature

The third part requires that you take the header and the payload and sign them with a secret key using the chosen encryption algorithm.

HMACSHA256( base64UrlEncode( header ) + "." + base64UrlEncode( payload ), secret)Once you have the Base64 encoded header, payload and signature strings, you concatenate them with a period and you have yourself a JSON Web Token.

When a user signs in, the API service responsible for authentication is invoked. If the credentials are valid, the API server will generate this token the way we have discussed above i.e. by using a secret key that only the API server knows and send this token back in the response.

Now there is no standard regarding how the server should send this token back to the client but here are some popular choices:

- Custom Response Header(

X-Access-TokenorX-Auth-Token) - The Response body

Custom Response Header( X-Access-Token or X-Auth-Token )

You can choose to put your token as a value in a custom header named X-Access-Token or X-Auth-Token.

X-Access-Token: eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.SflKxwRJSMeKKF2QT4fwpMeJf36POk6yJV_adQssw5cPlease note that these are not a registered HTTP headers but widely used as a convention.

The Response body

You can put the token in the response payload as a JSON object. As a real-life example, the FEDEX API uses this approach.

{ "access_token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.SflKxwRJSMeKKF2QT4fwpMeJf36POk6yJV_adQssw5c", "token_type": "Bearer", "expires_in": 3600}But why not use the Authorization header to send the token from the server to the client?🤔

The Authorization header is typically meant to be used for requests sent by the client to the server and not the other way around.

Here is how MDN defines its purpose:

The HTTP Authorization request header can be used to provide credentials that authenticate a user agent with a server, allowing access to a protected resource.

To see a fantastic demo of what JWTs are and how they work, head over to the JWT debugger.

4. OAuth( 2.0 )

OAuth 2.0 is an authorization protocol that is consided an industry-standard for authorization and is adopted by Google, Twitter and a whole lot of other big names out there.

A good example of OAuth in action is the Stack Overflow login screen.

It presents you options to login using either Google, Github or Facebook.

Say for example you choose to login with Google, then you'll be redirected to Google's login page where you'll enter your Google account credentials.

If your credentials are valid, Google will ask for your approval to allow Stack Overflow to gain access to some basic information like your name and email from your Google Account.

If you approve, Google will send this information to Stack Overflow which will in turn allow you to login.

Explaining the full OAuth flow is beyond the scope of this article so I'll try to explain all of that in a future blog post.

Till then, here are some great blog posts that dive into more detail about what OAuth is and how to implement OAuth in a NodeJS project.

5. HMAC Authentication

HMAC stands for Hash-based Message Authentication Code.

The central idea here is that the API server generates and assigns a unique private key to each client.

The client then uses its private key and generates an HMAC value by applying a hashing operation on information such as the username, the request body, a nonce, current timestamp, etc.

This HMAC value is then included in the request along with certain publicly identifiable information such as a username.

The server also generates its own version of the HMAC by using the same private key assigned to the client and if the client's HMAC value matches the one generated by the server, the request stands authenticated.

HMAC authentication is typically used as an additional layer of security along with HTTPS.

For reference, check out this article with more information on implementing authentication using HMAC.

6) Other Authentication Methods

We have discussed the most common authentication methods but for your reference, here is a list of a few alternate options for authentication along with the ones we have seen above.

7) Implementing Authentication using JWT in the Thunder Notes API

It's time to actually see authentication in action in our tutorial REST API. We'll implement authentication using JWT for the purposes of this tutorial.

The functionality for signing and verifying JSON Web Tokens is enabled in our sample API using an NPM library called jsonwebtoken. It's already installed as a part of the starter files and ready to use.

So far, notes was the only data entity and resource we were dealing with but now, we'll introduce the concept of users in the Thunder Notes API. As I had mentioned previously, our data in /data/data.js also has entries for some pre-defined users in the system.

Let's create a new API service that will authenticate the user and generate a JWT token.

Create a new file called authHandler.js within the handlers folder and paste this content into it.

const jwt = require( "jsonwebtoken" );const usersCtrl = require( "../controllers/usersController" );

exports.authenticate = ( req, res ) => { // route handling logic goes here.}We'll add the definition later. Let's hook this up to a route first.

In routes.js, import this authHandler.js file at the top.

const { authenticate } = require( "./handlers/authHandler" );After this add this new route.

router.post( "/auth", authenticate );Let's discuss the logic behind using this specific resource name and URI structure. After all remember, Identification of Resources is one of the first steps in designing a REST API.

As discussed previously in this article, resources should be named as nouns and should be plural. auth stands for authentication and is a noun and has no plural form so simply using the singular form is fine for our purposes.

Some other examples of URI structures we can use are:

POST /users/{id}/session

OR

POST /users/{id}/token

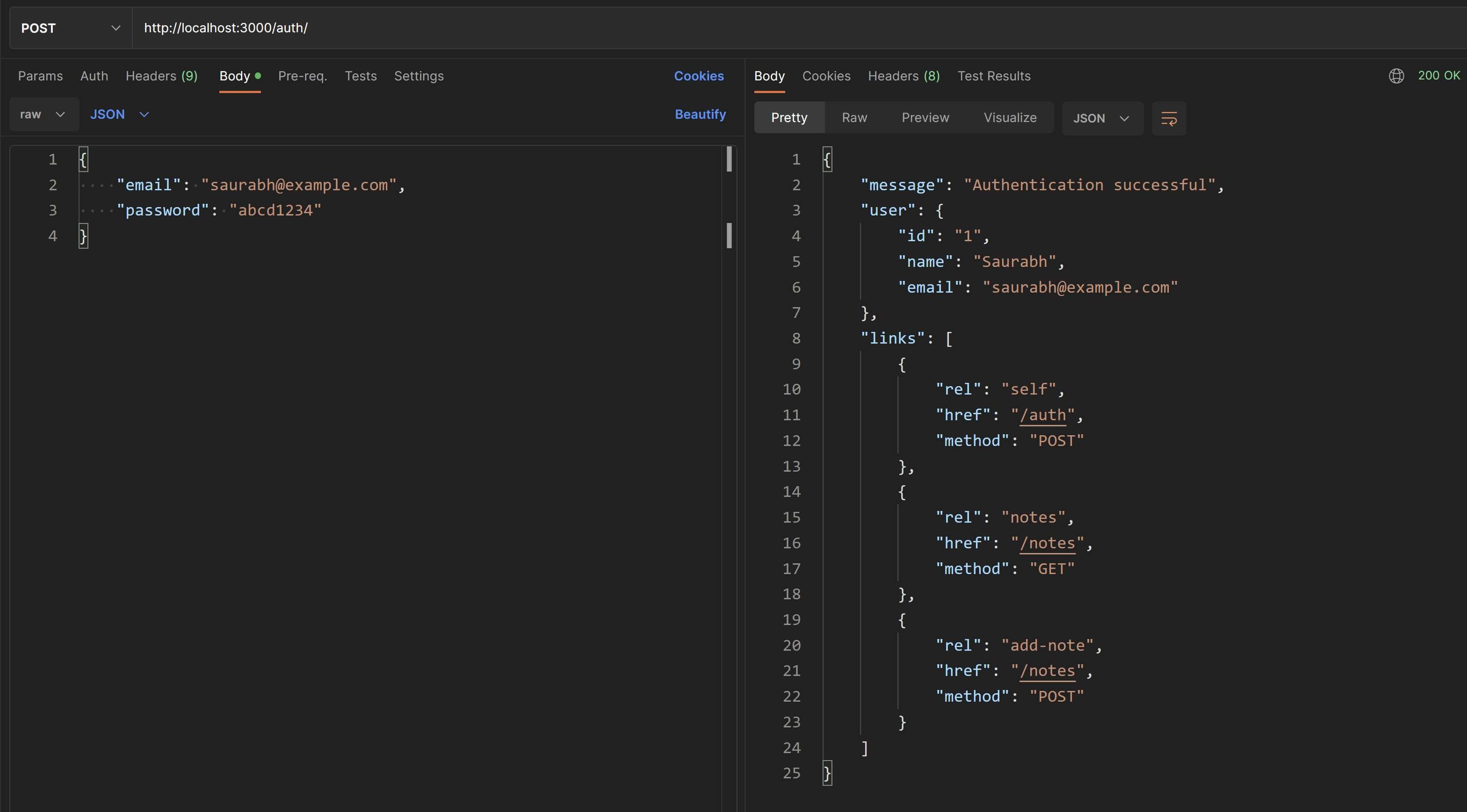

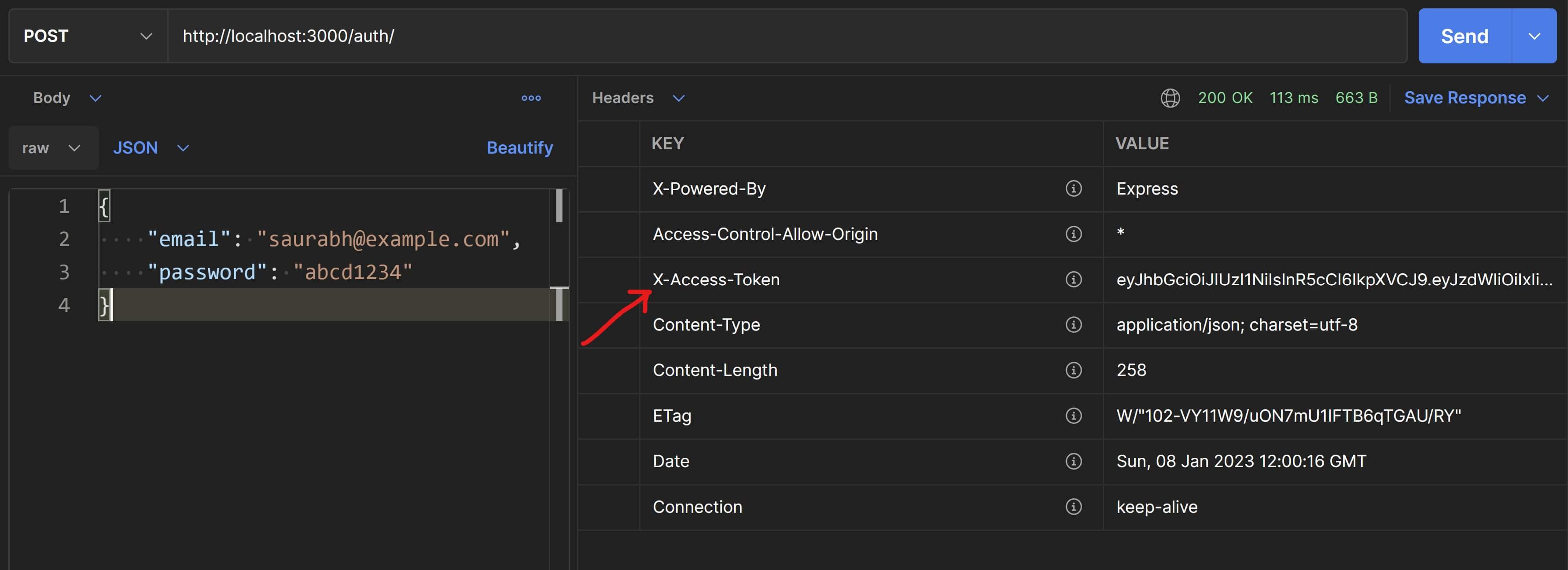

This new API service will accept the user's email and password as inputs. If the user is authenticated successfully, then the service will generate a JSON Web Token and send it to the client. We'll use the X-Access-Token header aproach to send this token from the server to the client in the response.

Let's add the definition for the new route handler function in authHandler.js. Replace the previous declaration with this one.

/** * This is the route handler for the `/auth` route. * Accepts the user's email and password as inputs. * Upon successful validation, returns a JWT token in the response header. * The encrypted token payload contains the user's ID and name. * Also included in the response is a `links` array for the next steps * that the client can take like "add a new note" or "fetch all notes for this user". */exports.authenticate = ( req, res ) => { // throw `400 Bad Request` if either "email" or "password" is not provided. if( !( "email" in req.body && "password" in req.body ) ) { return res.status( 400 ).json({ "message": "Invalid request" }); }

const { email, password } = req.body;

// check whether a user with this email exists. // normally the password will be hashed before the comparison. // but we are storing the password directly without hashing for convenience // while testing and for minimizing implementation details. const user = usersCtrl.getUserByCredentials( email, password ); if( !user ) { return res.status( 401 ).json({ "message": "Invalid credentials" }); }

// Use the private key to sign payload and generate the JWT. // DO NOT INCLUDE any sensitive information in the payload. // Only include some basic info that can identify the user. const token = jwt.sign( { sub: user.id, name: user.name }, process.env.TN_JWT_PRIVATE_KEY ); // Configure a custom header in the response with the token value. res.setHeader( "X-Access-Token", token );

// HATEOAS links const links = [ { "rel": "self", "href": "/auth", "method": "POST" }, { "rel": "notes", "href": "/notes", "method": "GET" }, { "rel": "add-note", "href": "/notes", "method": "POST" } ]

// send a confirmation message, some basic user info and HATEOAS links in the response res.json({ message: "Authentication successful", user: { id: user.id, name: user.name, email: user.email }, links });}The process.env is a NodeJS-specific global variable which holds the environment variables for our API server(the ones we have declared in env.js).

Let's call this API service in Postman.

Also check the Headers tab for the value of the X-Access-Token header.

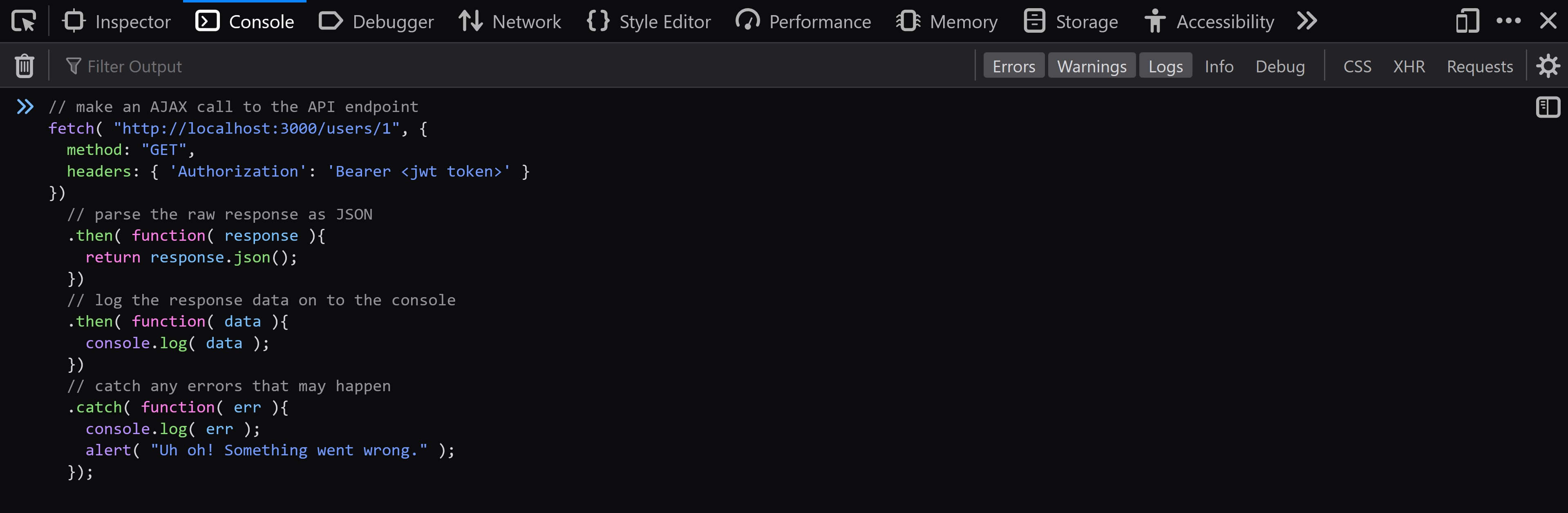

The client will read this auth token from the response header and will send it in the Authorization header as a part of any subsequent API requests. The server upon receiving these requests will check the Authorization header and if it finds a token in there, then it'll verify it.

So let's add some functionality so that the server can verify this token.

This verification step will need to be implemented before the route handler function begins its processing of the request. This sounds like a job for a middleware.

We have already created one middleware before called noteValidation.js and have already discussed what middlewares are and what purpose they serve.

Let's create a new file called auth.js within the middlewares/ folder and this code to it.

const jwt = require( "jsonwebtoken" );

/* This middleware function reads the token value from the `Authorization` header and verifies it. It attaches the token payload into the request object and passes it on to middlewares further up the chain. */module.exports = function verifyToken( req, res, next ) { // read the `Authorization` header. If not provided, return a `401 Not Authorized`. const authHeader = req.header( "Authorization" ); if( !authHeader ) { return res.status(401).send({ "message": "Access denied. No token provided." }); }

// The value will be in the format, `Bearer <token value>`. // Parse the token value by removing the text "Bearer ". const token = authHeader.replace( "Bearer ", "" ); try {

// verify the token const decodedPayload = jwt.verify( token, process.env.TN_JWT_PRIVATE_KEY ); /* In ExpressJS, the `res.locals` object is used to store/expose information that is valid throughout the lifecycle of the request. It is ideal for transferring information between middlewares. So we'll store the decoded payload in `res.locals.user` so that it can be used by middlewares further up the chain. */ res.locals.user = decodedPayload;

// call the next middleware next();

} catch( err ){ // if the token cannot be decoded, send `400 Bad Request` error. return res.status(400).send({ "message": "Access denied. Invalid token." }); }}Let's change our routes to include this new middleware. Replace the entire contents of routes.js with this:

const express = require( "express" );const router = express.Router();// route handlersconst { handleRoot } = require( "./handlers/rootHandler" );const { authenticate } = require( "./handlers/authHandler" );const noteHandlers = require( "./handlers/noteHandlers" );// middlewaresconst verifyToken = require( "./middlewares/auth" );const validateNote = require( "./middlewares/noteValidation" );

// root endpointrouter.get( "/", handleRoot );

router.post( "/auth", authenticate );

// notes or collection endpointsrouter.get( "/notes", verifyToken, noteHandlers.getNotes );router.post( "/notes", verifyToken, noteHandlers.createNote );

// singleton `note` endpointsrouter.get( "/notes/:id", verifyToken, validateNote, noteHandlers.getNote );router.put( "/notes/:id", verifyToken, validateNote, noteHandlers.updateNote );router.patch( "/notes/:id", verifyToken, validateNote, noteHandlers.editText );router.delete( "/notes/:id", verifyToken, validateNote, noteHandlers.deleteNote );

// `PUT` is typically not used with collection resources,// unless you want to replace the entire collection. // which is why we'll treat this as an invalid route.router.put( "/notes", verifyToken, noteHandlers.handleInvalidRoute );

module.exports = router;For singleton endpoints i.e. /notes/{id}, we have two middlewares. The request will first run verifyToken() and if the user is authorized, the control will move on to the next middleware i.e. validateNote(). Only once the request is validated will the control move on to run the route handler function.

For collection endpoints, we only have one middleware i.e. verifyToken().

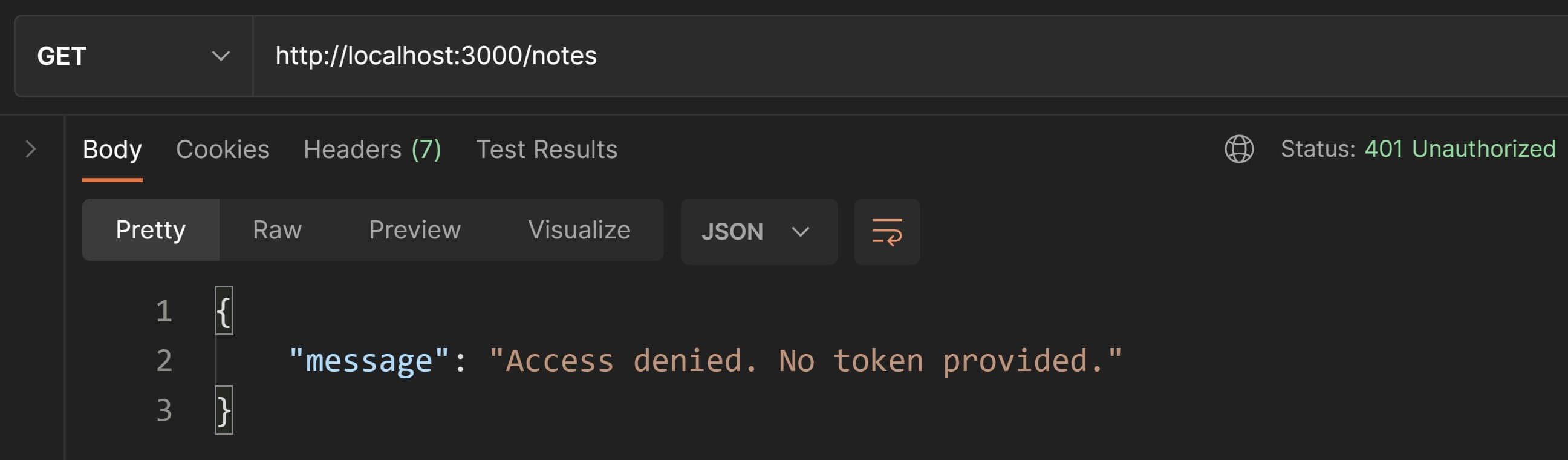

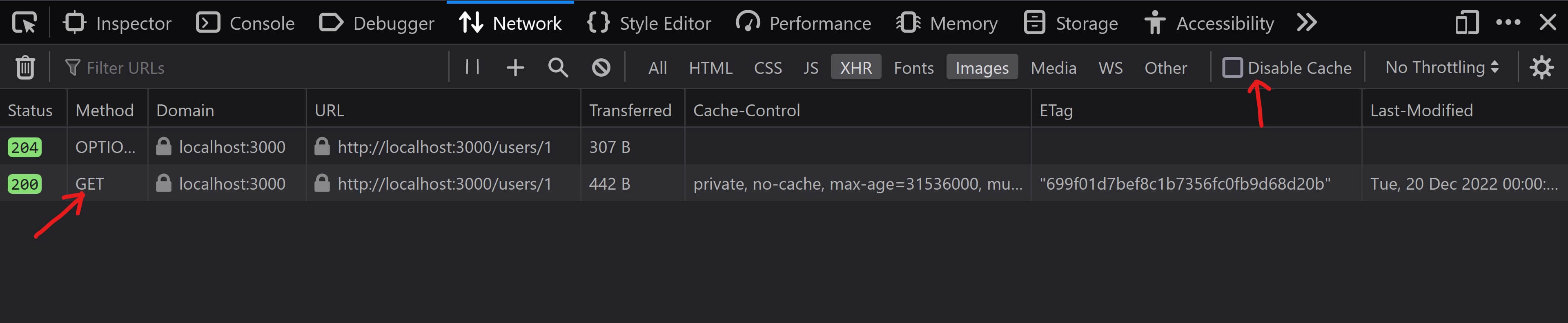

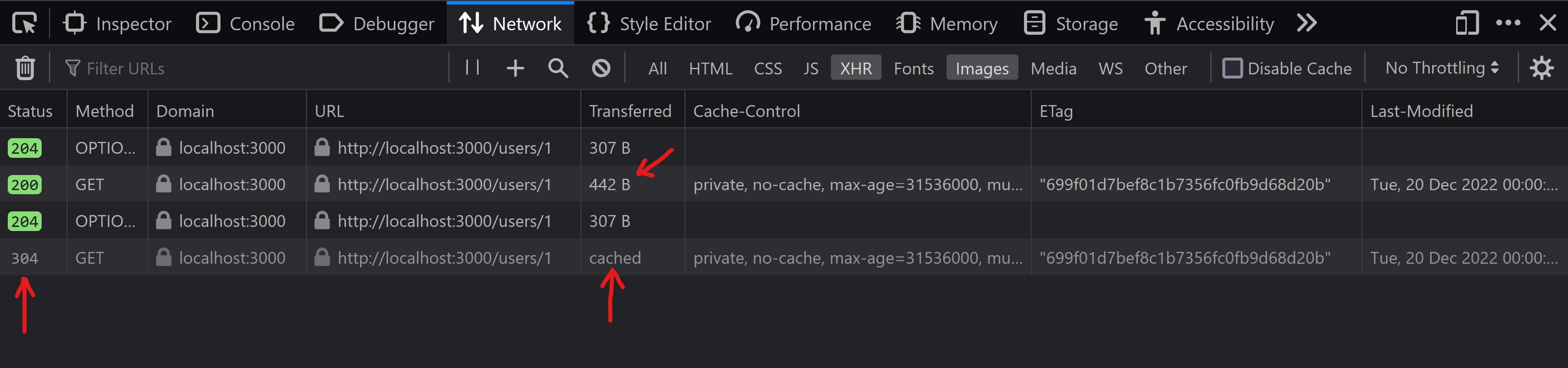

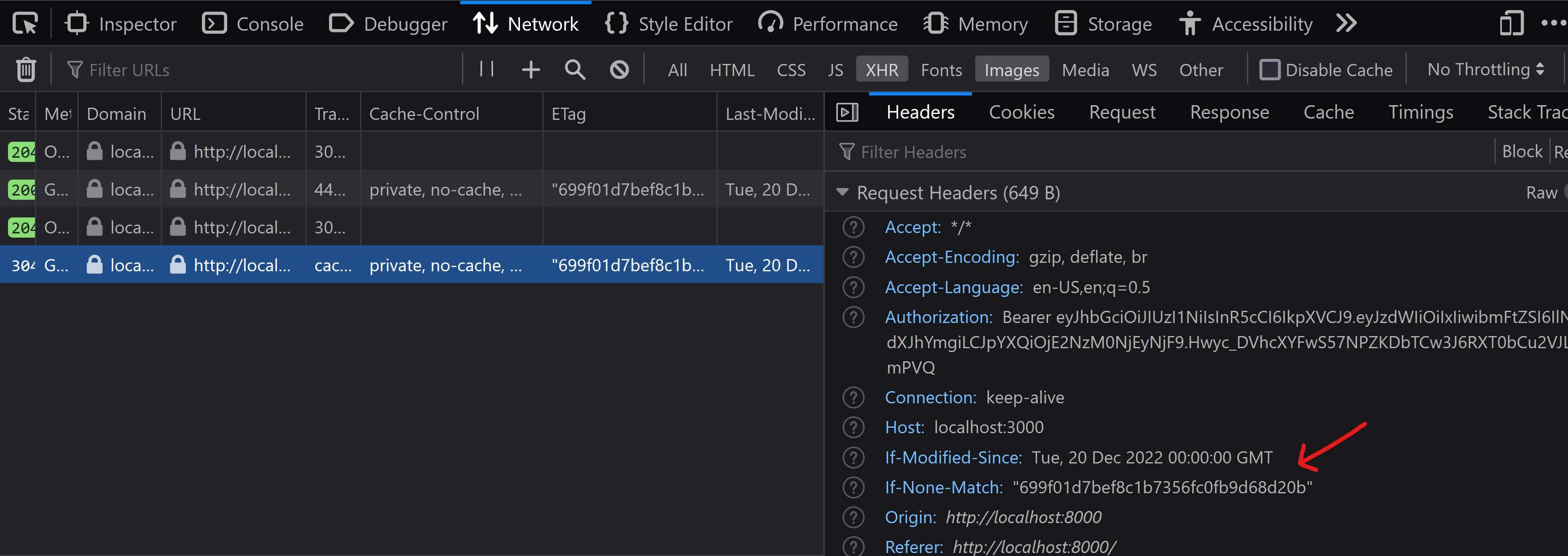

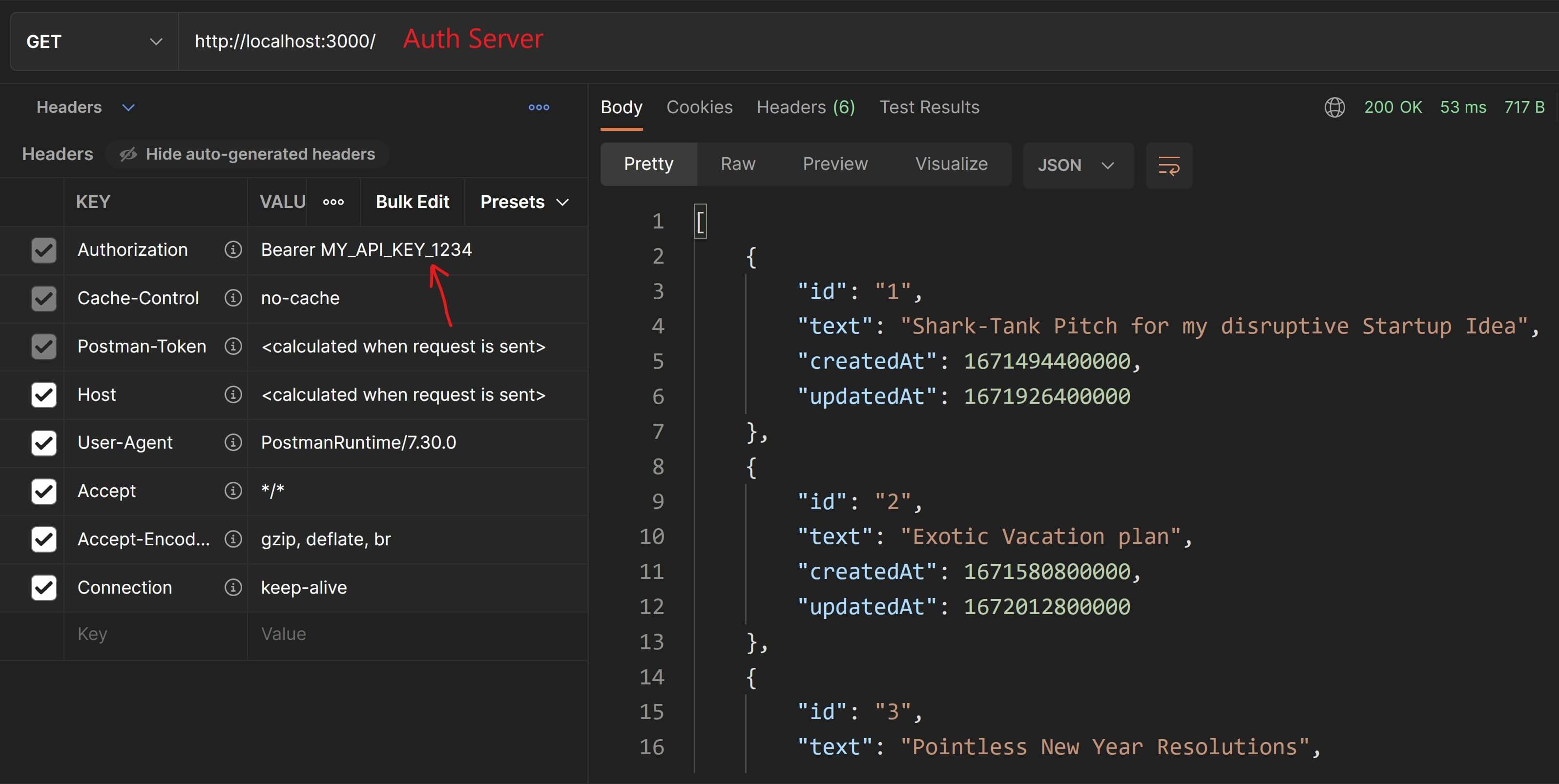

Let's test these changes in Postman. Try to fetch all notes and you should see an error as in the screenshot below.

Great👍! This means our authorization middleware is working.

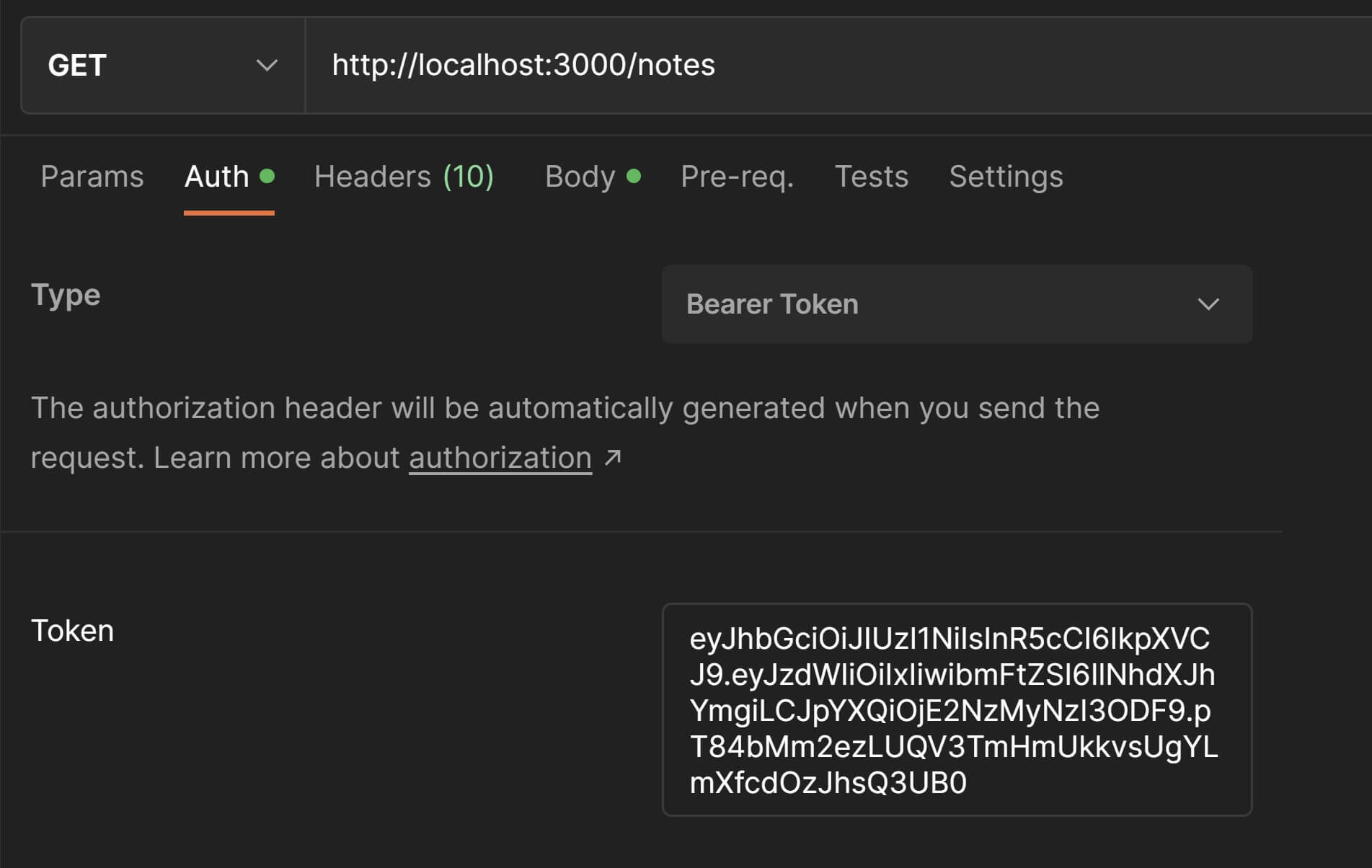

Now in order to fetch all notes, we need to include the Authorization header in our request and provide a Bearer <token> as the value of this header. To do this, simply send a GET request to the /auth endpoint with the username and password of the user you wish to login with from data.js. The response will have the token in the header X-Access-Token as demonstrated previously.

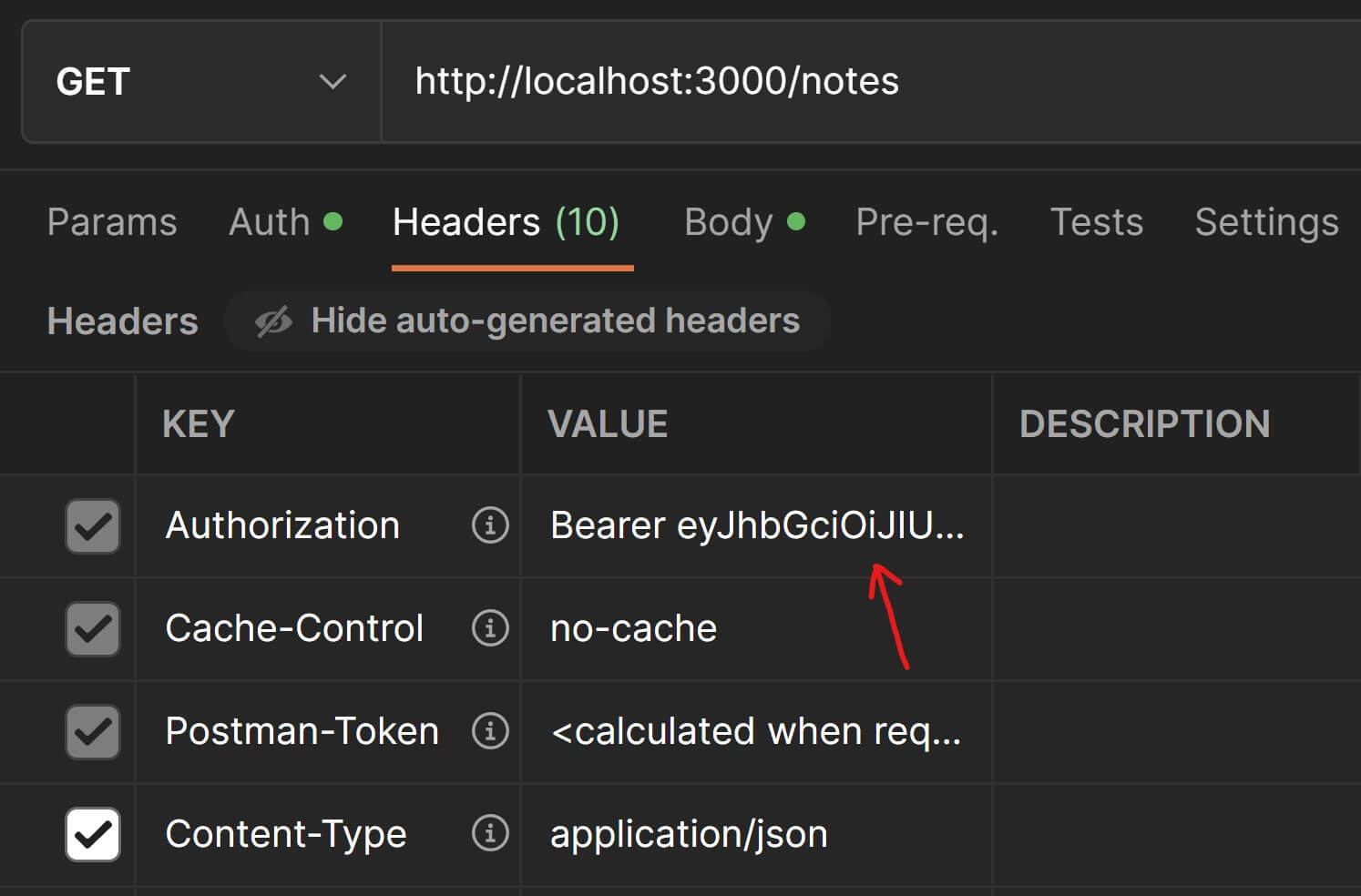

Copy this token, keep the method set to GET. Modify the URL in Postman to /notes. Go the Auth tab in the Request Panel and select Bearer Token as the value of the Type field and paste the copied token.

Postman will automatically include this in the request headers as a key-value pair which you can confirm by going to the Headers tab in the Request Panel.

Now hit Send and check the response. You will no longer get the error and will be able to fetch all the notes just like before.

BUT WAIT✋! Right now we're able to fetch all the notes in data.js, even the ones that belong to a different user than the one we have authenticated with. We'll need to make sure that users can only access notes that are associated with them and not someone else's notes.

This is currently true for the getNotes(), getNote() and other singleton resource route handlers which are able to access all notes irrespective of which user they belong to. Let's remedy this quickly.

First, let's include the usersController.js file in noteHandlers.js at the top.

const { getUserById } = require( "../controllers/usersController" );Now go to notesController.js and delete the function definition for getAllNotes() controller function. This function was only required as long as we had not implemented authorization and authentication. We don't need it anymore because we don't want any request to retrieve all notes from our database.

Next, let's replace the call to this deleted function in the getNotes() route handler, with the controller function getNotesByIds(). Replace the function definition for getNotes() with this:

exports.getNotes = ( req, res ) => { // get all info about the user const user = getUserById( res.locals.user.sub );

// get array of note objects from the array of note IDs let responseNotes = notesCtrl.getNotesByIds( user.notes );

// send a `200 OK` response with the note objects in the response payload res.json( hateoasify( responseNotes ) );}If you're wondering what res.locals.user is then it is where the auth.js middleware stores the decoded payload from the authorization token. So res.locals.user.sub is the currently authenticated user's ID.